When personality enters the equation, process management seems to vanish.

Some things just seem to get to me, and one is the concept of “process management.” It’s not that I don’t like or believe in it. Over the years I have spent tons of money successfully working to improve processes, and have seen firsthand the benefit improved process management can yield. There really is a solid return on investment when improvements are made and productivity improves.

But what gets me is that process management seems to only matter when applied to “things” vs. “people.”

Everyone, regardless of industry or job function, loves to tout improvements derived from applying a process improvement to a “thing.” This may include manufacturing equipment (hardware or software), end-products (newly developed or longstanding favorites) and even bricks and mortar (or the elimination of same). However, when the process that needs improvement exclusively involves personnel, and someone’s name is identified with said “process improvement,” watch everyone shy away.

By identifying names, I am referring to when the process improvement involves people who work with people (versus equipment), and when the processes that those people should be developing or following are associated with a small number of colleagues, which means those involved are easily identified. Usually, those people work in externally focused departments (sales, purchasing, administrative functions such as HR and accounts payable and receivable). The process management challenge is to improve the level of service, support or value-add that would create a solid relationship in processes that have heavy interpersonal versus machine-driven interaction. And it is not just the supplier/customer relationship. In any situation that involves people – supplier, customer, consultant, employee – friend or foe – when personality enters the equation, process management seems to vanish.

Some examples: When someone evaluates a plating process and comes up with an optimal chemistry, preferred timing and sequence, or improved equipment or configuration, they tout their process management skills and the resulting improvement as a major victory. However, when dealing with the timing and sequence of involving people in, say, periodic capability updates with customers, or the frequency and “configuration” of communication between key suppliers and purchasing, or even just being more visible with key decision-makers at specific customers or suppliers, those involved often shy away from dealing with the situation. Far worse, if there is a problem, almost everyone will assume the ostrich position: head in the sand, hoping no one notices the deafening silence caused by a lack of process or management.

This is not to say initiatives don’t take place in people-driven processes. But too often these efforts manifest themselves as software-focused “interfaces” or “portals,” created to give a cyber impression of involvement and progressive process management in how people are dealt with, without actually helping the managers tasked with dealing with those people. At other times, an employee who tries to assist will pull off a momentary act of heroic brilliance. Yet, if the action is not embraced with appropriate reward and recognition, and then adopted and implemented as a true process improvement, then it is not truly process management.

When a process exclusively involves people, rather than focusing on improving it, we tend to deal only with the most serious problems, and even then not in the context of process improvement, but in a superficial, expeditious way.

Using the most basic definition, we are all job shops that cannot create demand or inventory product for future customers, but must react to customers’ specific technological as well as volume needs, responding only when they want it. What differentiates us all – for better or worse – is the level of support and service we offer; the relationships we have with our suppliers, and our abilities to pull together diverse subcontract capabilities to satisfy a pressing customer need. In short, what differentiates us is our ability to interact with … people.

And yet, we tend to not focus as much on process improvement in the very areas that could and will best differentiate us. Yes, cutting-edge software or web portals may help, but do they really address what customers want? We might have the most technologically savvy staffs, but if they don’t want – or know how – to interact with customers consistently and effectively, what is their use? We may be able to identify customers’ or suppliers’ problems, but if we explicitly or implicitly cop an attitude that communicates that we are not the ones who should help, how can our role be viewed as value-add?

While most companies have undergone extraordinary measures to improve internal design and manufacturing process management, I would bet that if the folks at ISO really focused on processes that involve suppliers and customers, most companies would not qualify for certification. Which brings me to the importance of embracing all the processes that link your company with the people to whom you are most trying to provide value: customers. Only by making sure you apply the best available processes and people management so you are consistent in how well you treat all the related people – suppliers through customers – can you begin to focus on providing the value-adding process management they all seek.

Disney and Apple seem to get this. Disney parks are consistent in their customer focus and process management to make sure that all customers (“guests”) feel they had the best experience and received the greatest value for their money. Ditto with Apple; its retail stores emphasize service, support and the total “Apple” experience, and hence, the value-add of buying its products and much of its brand loyalty.

In our industry, the highest level of value-add is in supporting engineers who create cutting-edge technology with the often-invaluable manufacturability input we can offer. It’s about making the buying experience easy and seamless for that engineer or the buyer who is under pressure to cut all costs. Value-add is building the personal relationship of being the company that can “make it happen.” And yet, when resources are committed, investments made, and HR reviews given, the people side of process management is all too often the area ignored or neglected.

If only managers and workers realized how much their attitude, commitment and over-the-top involvement mean to the bottom line of their customers, as well as their colleagues. Going above and beyond is great, but when that attitude, skill set and commitment are part of the process management – process improvement – then true value-add will be provided to customers and suppliers.

Peter Bigelow is president and CEO of IMI (imipcb.com); pbigelow@imipcb.com. His column appears monthly.

Shipments and revenues should skyrocket in 2010, leaving the spectres of 2009 behind.

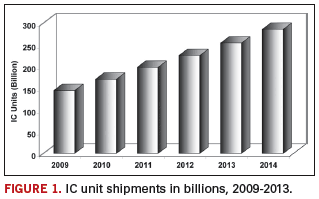

IC revenue will stage a comeback this year, growing 18.8%, with unit growth a tick lower at 18%. Both figures are considerably better than the 8.8% decline in revenue and 6.9% decline in shipments in 2009.
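Because the 2010 growth applies to a 2009 base that had already shrunk, the two years compound rather than simply add. A minimal sketch using only the percentages quoted above, assuming each figure is a change on the prior year's total:

```python
# Year-over-year changes quoted in the column, as fractions of the prior year.
REVENUE_CHANGE = {2009: -0.088, 2010: 0.188}
UNIT_CHANGE = {2009: -0.069, 2010: 0.18}


def net_change(rates: dict) -> float:
    """Compound successive year-over-year rates into a single net change."""
    level = 1.0
    for year in sorted(rates):
        level *= 1.0 + rates[year]
    return level - 1.0


revenue_vs_2008 = net_change(REVENUE_CHANGE)
units_vs_2008 = net_change(UNIT_CHANGE)
print(f"2010 IC revenue vs. 2008: {revenue_vs_2008:+.1%}")  # +8.3%
print(f"2010 IC units vs. 2008:   {units_vs_2008:+.1%}")    # +9.9%
```

If the forecasts hold, revenue would end 2010 roughly 8% above its 2008 level, not the 10 points that simple subtraction (18.8 − 8.8) suggests.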

DRAMs are anticipated to be the largest growth area for ICs, with revenues up 40% in 2010. Revenue growth in excess of 15% is also expected for numerous analog chips (including regulators and references, and chips for computer, communications, automotive and industrial applications); special-purpose logic chips for consumer, computer, communications and automotive applications; flash; EEPROM; 32-bit MCUs; and standard cell and PLD chips.

Shipments should continue to climb at least through 2013, according to NVR data. Unit growth should top 100 billion over the four-year span of 2009 to 2013 (Figure 1).

What’s driving the recovery? Low interest rates, low oil prices, and the stimulus packages instituted around the world are all contributing to a stabilizing economy and upturn. Purchases fell below replacement levels in 2009, and the resulting pent-up demand is pulling the market in a positive direction.

Cellphones, particularly high-end smartphones, will see high growth rates. Smartphones are gaining in popularity and becoming a larger piece of the cellphone pie. Anything handheld and somewhat affordable that keeps us connected to the rest of the world seems to be doing well. New product introductions such as Apple’s latest iPhone are hot topics; the iPad is expected to do well, and Research in Motion’s BlackBerry has been doing well for some time.

Netbook computers, with prices as low as $200 during holiday sales, and notebook computers are driving up IC demand. Other high growth areas include 3-D and digital TVs, DSL/cable modems, flash drives, memory cards, set-top boxes, digital cameras, automotive, and an assortment of audio applications.

The economy is stabilizing, which is easing fears about spending on consumer goods. The housing market, which took down the economy by taking the credit markets with it, is stabilizing, and the ratio of income-to-housing expenditures is more balanced than it was previously.

The automotive market, host for numerous ICs, fell substantially during the downturn. This market did benefit from the cash-for-clunkers program, and although automotive sales receded again after the program ended, the program gave spending a welcome boost. Automotive is expected to turn up in 2010 and beyond, particularly in areas such as China. Overall, spending is higher now than it was in the depths of 2009, and that is what is pulling us up and out of the slump of 2009 and will carry us to a more positive future.

Sandra Winkler is senior industry analyst at New Venture Research Corp. (newventureresearch.com); swinkler@newventureresearch.com. This column runs bimonthly.

The sudden unintended acceleration problems in Toyota’s vehicles have touched off a firestorm of controversy over the cause(s). Accusations of problems with the electronic throttle system were quickly followed by emphatic denials by the automaker. Then on Feb. 23, Toyota’s top US executive testified under oath before the US Congress that the automaker had not ruled out electronics as a source of the problems plaguing the company’s vehicles. Subsequently, other company officials denied that was true.

To confuse matters more, a professor of automotive technology claims to have found a flaw in the electronics system of no fewer than four Toyota models that “would allow abnormalities to occur.” Testifying before Congress, David W. Gilbert, a Ph.D. with almost 30 years’ experience in automotive diagnostics and troubleshooting, said the trouble locating the problem’s source could stem from a missing defect code in the affected fleet’s diagnostic computer.

Prof. Gilbert said his initial investigation found problems with the “integrity and consistency” of Toyota’s electronic control modules to detect potential throttle malfunctions. Specifically, Prof. Gilbert disputed the notion that every defect would necessarily have an associated code. The “absence of a stored diagnostic trouble code in the vehicle’s computer is no guarantee that a problem does not exist.” Finding the flaw took about 3.5 hours, he added. (A video of Prof. Gilbert’s test at his university test track is at www.snotr.com/video/4009.)

It took two weeks for the company to strike back. In early March, Toyota claimed Prof. Gilbert’s testimony (http://circuitsassembly.com/blog/wp-content/uploads/2010/03/Gilbert.Testimony.pdf) on sudden unintended acceleration wasn’t representative of real world situations. In doing so however – and this is important – Toyota made no mention (at least in its report) about Prof. Gilbert’s more important finding: the absence of the defect code. Was Toyota’s failure to address that an oversight? Or misdirection?

Second in concern only to the rising death toll is Toyota’s disingenuous approach to its detractors. Those who follow my blog realize I’ve been harping on this for several weeks. But why, some readers have asked.

The reason is subtle. Electronics manufacturing rarely makes international headlines, and when it does, it’s almost always for the wrong reasons: alleged worker abuses, product failures, (mis)handling of potentially toxic materials, and so on. The unfolding Toyota story is no different.

Yet it’s important the industry get ahead of this one. Planes go down over oceans and their black boxes are lost to the sea. Were the failures brought about by conflicts between the cockpit navigational gear and on-board satellite entertainment systems? When cars suddenly accelerate, imperiling their occupants, was it a short caused by tin whiskers that left the driver helpless? It’s vital we find out.

In the past four years, NASA’s Goddard Space Flight Center, perhaps the world’s premier investigator of tin whiskers, has been contacted by no fewer than seven major automotive electronics suppliers inquiring about failures in their products caused by tin whiskers. (Toyota reportedly is not one of them, but word is NASA will investigate the incidents on behalf of the US government.) These are difficult, painful questions, but they must be examined, answered, and the results disseminated. Stonewalling and misdirection only heighten the anxiety and fuel accusations of a cover-up.

We as an industry so rarely get the opportunity to define just how exceedingly difficult it is to build a device that works, out of the box, as intended, every time. A Toyota Highlander owner has a satellite TV monitor installed into his dash, then finds certain controls no longer work as designed. A Prius driver’s car doesn’t start when he’s using his BlackBerry. It’s impossible for an automotive company to predict and design for every single potential environmental conflict its models may encounter.

The transition to lead-free electronics has been expensive and painful for everyone – even for those exempt by law, because of the massive infiltration of unleaded parts in the supply chain. And despite no legal impetus to do so, some auto OEMs have switched to lead-free. Moreover, to save development time and cost, automakers are quickly moving to common platforms for entire fleets of vehicles, dramatically exacerbating the breadth of a defect. We do not yet know if lead-free electronics is playing a role in these catastrophic failures. But if tin whiskers or some other electronics-related defect is the cause, or even a cause, of these problems, we need to know. If we are inadvertently designing EMC problems in, we need to know.

Toyota’s PR disaster could be a once-in-a-lifetime chance for the electronics industry to reposition itself. What we build is important and life-changing. This is a chance to take back our supply chains from those whose single purpose is cost reduction, and to redefine high-reliability electronics as a product worth its premium. And it’s a chance to explore whether wholesale industry changes are conducted for the good of the consumer, or for short-term political gain. It’s a tragic reminder that science, not opinion, must always win, and that moving slowly but surely is the only acceptable pace when designing and building life-critical product.

‘Virtually’ great. A big “thank you” to the 2,600-plus registrants of this year’s Virtual PCB conference and exhibition. It was the best year yet for the three-year-old show, confirming once again that there’s more than one way for the industry to get together. The show is available on-demand through May 4; be sure to check it out at virtual-pcb.com.

Setting up the proper feedback loop saves time and cost in re-spins.

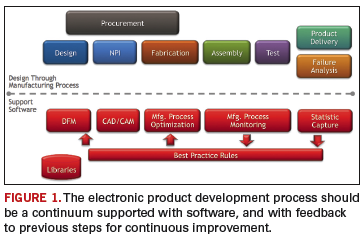

When considering electronics product development, design and manufacturing often are thought of as separate processes, with some interdependencies. The processes seldom are considered as a continuum, and rarely as a single process with a feedback loop. But the latter is how development should be considered for the purposes of making competitive, fast-to-market, lowest-cost products.

Figure 1 shows the product development process from design through manufacturing to product delivery. This often is a segmented process, with data exchange and technical barriers between the various steps. These barriers are being eliminated and the process made more productive by software that provides for better consideration of manufacturing in the design step, more complete and consistent transfer of data from design to manufacturing, manufacturing floor optimization, and the ability to capture failure causes and correct the line to achieve maximum yields. The goal is to relay the failure causes as improved, best-practice DfM rules and prevent failures from happening.

The process starts with a change in thinking about when to consider manufacturing. Design for manufacturability should start at the beginning of the design (schematic entry) and continue through the entire design process. The first step is to have a library of proven parts, both schematic symbols and component geometries. This forms the base for quality schematic and physical design.

Then, during schematic entry, DfM requires communication between the designer and the rest of the supply chain, including procurement, assembly, and test through a bill of materials (BoM). Supply chain members can determine if the parts can be procured in the volumes necessary and at target costs. Can the parts be automatically assembled or would additional costs and time be required for manual operations? Can these parts be tested using the manufacturer’s test equipment? After these reviews, feedback to the designer can prevent either a redesign or additional product cost in manufacturing.
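The BoM review described above can be sketched as a simple pass over the parts list that answers the procurement, assembly and test questions for each line item. This is a minimal illustration only: the `BomLine` fields, part numbers and quantities are hypothetical, and in practice each supply-chain member would supply its own data rather than the designer filling it in.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class BomLine:
    # Hypothetical fields; procurement, assembly and test each own their answers.
    ref_des: str
    part_number: str
    qty_per_board: int
    unit_cost: float
    target_cost: float
    available_qty: int    # what procurement can source for the build
    auto_placeable: bool  # can the assembler machine-place it?
    testable: bool        # can the manufacturer's test equipment reach it?


def review_bom(lines: list[BomLine], build_qty: int) -> list[str]:
    """Return findings to feed back to the designer during schematic entry."""
    findings = []
    for line in lines:
        tag = f"{line.ref_des} ({line.part_number})"
        needed = line.qty_per_board * build_qty
        if line.available_qty < needed:
            findings.append(f"{tag}: need {needed}, only {line.available_qty} available")
        if line.unit_cost > line.target_cost:
            findings.append(f"{tag}: cost {line.unit_cost:.2f} exceeds target {line.target_cost:.2f}")
        if not line.auto_placeable:
            findings.append(f"{tag}: requires manual assembly")
        if not line.testable:
            findings.append(f"{tag}: not testable on target equipment")
    return findings


bom = [
    BomLine("U1", "MCU-123", 1, 2.40, 2.50, 50_000, True, True),
    BomLine("J3", "CONN-TH-8", 1, 0.90, 0.75, 2_000, False, True),
]
for finding in review_bom(bom, build_qty=10_000):
    print(finding)
```

Here every finding lands on the through-hole connector J3, exactly the kind of early feedback that can head off a redesign or added manufacturing cost.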

The next DfM step is to ensure the layout can be fabricated, assembled and tested. Board fabricators do not just accept designer data and go straight to manufacturing. Instead, fabricators always run their “golden” software and fabrication rules against the data to ensure they will produce boards that are not hard failures, and to determine adjustments to avoid soft failures that could decrease production yields.

So, for the PCB designer, part of the DfM is to use this same set of golden software and rules often throughout the design process. This practice not only could prevent design data from being returned from the fabricator or assembler, but, if used throughout the process, could ensure that design progress is always forward, with no redesign necessary.
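The distinction between hard and soft failures maps naturally onto a two-threshold rule check the designer could run repeatedly during layout. A minimal sketch, assuming hypothetical rule values in mils and features that have already been measured; real golden software works directly on the layout geometry.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    hard_min: float  # below this the fabricator cannot build it (hard failure)
    soft_min: float  # below this it builds, but yield may suffer (soft failure)


# Hypothetical "golden" values in mils; a real set comes from the fabricator.
GOLDEN_RULES = {
    "trace_width": Rule(hard_min=3.0, soft_min=4.0),
    "spacing": Rule(hard_min=3.0, soft_min=5.0),
    "annular_ring": Rule(hard_min=2.0, soft_min=4.0),
}


def check(measurements: dict[str, list[tuple[str, float]]]) -> list[tuple[str, str, str, float]]:
    """measurements maps rule name -> [(feature_id, value_in_mils), ...].
    Returns (severity, rule, feature_id, value) for every violation."""
    violations = []
    for rule_name, items in measurements.items():
        rule = GOLDEN_RULES[rule_name]
        for feature_id, value in items:
            if value < rule.hard_min:
                violations.append(("HARD", rule_name, feature_id, value))
            elif value < rule.soft_min:
                violations.append(("SOFT", rule_name, feature_id, value))
    return violations


design = {
    "trace_width": [("CLK", 3.5), ("GND", 10.0)],
    "spacing": [("U1-U2", 2.5)],
}
for violation in check(design):
    print(violation)
```

Running the same rule set the fabricator uses, early and often, is what keeps design progress moving forward instead of bouncing back from the fab.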

A second element in this DfM process is the use of a golden parts library to facilitate the software checks. This library contains information typically not found in a company’s central component libraries, but rather additional information specifically targeted at improving a PCB’s manufacturability. (More on this later.)

When manufacturing is considered during the design process, progress already has been made toward accelerating new product introduction and optimizing line processes. Design for fabrication, assembly and test, when considered throughout the design function, helps prevent the manufacturer from having to drastically change the design data or send it back to the designer for re-spin. Next, a smooth transition from design to manufacturing is needed.

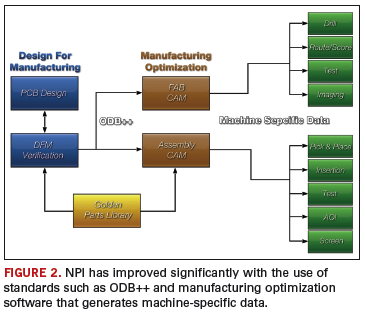

As seen in Figure 2, ODB++ is an industry standard for transferring data from design to manufacturing. This standard, coupled with specialized software in the manufacturing environment, serves to replace the need for every PCB design system to directly produce data in the formats of the target manufacturing machines.

Time was, design systems had to deliver Gerber plus machine-specific data for drill, pick-and-place, test, etc. Through standard data formats such as ODB++ (and other standards such as GenCAM), and available fab and assembly optimization software, the manufacturing engineers’ expertise can be capitalized on. One area is bare board fabrication. If the designer has run the same set of golden rules against the design, there is a good chance no changes would be required, except ones that might increase yields. This might involve the manufacturer spreading traces, or adjusting stencils or pad sizes. But the risk here is that a manufacturer that does not understand tight-tolerance rules may degrade product performance. For example, with the emerging SERDES interconnect routing that supports data speeds up to 10 Gb/s, matching trace lengths can be down to 0.001″ tolerance. Spreading traces might violate these tolerances. It is important the OEM communicate these restrictions to the manufacturer.
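To see why a yield-driven edit can break a matched group, here is a minimal length-matching check using the 0.001″ tolerance cited above. The net names and lengths are hypothetical, and a real check would re-measure the routed geometry rather than take lengths as given.

```python
from __future__ import annotations

# Matched-length tolerance cited in the text, in inches.
TOLERANCE_IN = 0.001


def length_skew(lengths: dict[str, float]) -> float:
    """Spread between the longest and shortest trace in a matched group."""
    return max(lengths.values()) - min(lengths.values())


def within_tolerance(lengths: dict[str, float], tol: float = TOLERANCE_IN) -> bool:
    return length_skew(lengths) <= tol


# Hypothetical SERDES pair as released by the designer (inches).
as_designed = {"TX0_P": 2.5000, "TX0_N": 2.5008}

# The fabricator "spreads" TX0_N for yield, adding a small jog to its length.
after_spreading = dict(as_designed, TX0_N=as_designed["TX0_N"] + 0.0015)

print(within_tolerance(as_designed))      # True  (skew 0.0008 in)
print(within_tolerance(after_spreading))  # False (skew 0.0023 in)
```

A 0.0015″ jog is invisible to a generic yield rule but triples the pair's skew, which is why the restriction has to travel with the design data.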

From an assembly point of view, the manufacturer will compare received data (pad data and BoM) to a golden component library. Production engineers rely on this golden library to help identify BoM errors and footprint (land pattern) mismatches prior to first run. For example, the actual component geometries taken from manufacturer part numbers in the BoM are compared to the CAD footprints to validate correct pin-to-pad placement. Pin-to-pad errors could be due to a component selection error in the customer BoM. Although subtle pin-to-pad errors may not prevent parts from soldering, they could lead to long-term reliability problems.
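The pin-to-pad validation described above amounts to matching each pin center from the manufacturer's part geometry to the same-numbered pad in the CAD footprint. A minimal sketch, assuming both sets of coordinates share one origin and rotation; the SOT-23 coordinates and the 0.05 mm tolerance are hypothetical.

```python
from __future__ import annotations

import math


def pin_to_pad_errors(pins: dict[str, tuple[float, float]],
                      pads: dict[str, tuple[float, float]],
                      tol_mm: float = 0.05) -> list[str]:
    """Compare component pin centers (from the manufacturer part's geometry)
    with CAD footprint pad centers; both must share one origin and rotation."""
    errors = []
    for pin, (px, py) in sorted(pins.items()):
        if pin not in pads:
            errors.append(f"pin {pin}: no matching pad")
            continue
        ax, ay = pads[pin]
        offset = math.hypot(ax - px, ay - py)
        if offset > tol_mm:
            errors.append(f"pin {pin}: pad is {offset:.2f} mm away")
    for pad in sorted(pads.keys() - pins.keys()):
        errors.append(f"pad {pad}: no matching pin")
    return errors


# Hypothetical SOT-23 geometry (mm) for the manufacturer part in the BoM...
datasheet_pins = {"1": (-0.95, -1.0), "2": (0.95, -1.0), "3": (0.0, 1.0)}
# ...and a CAD footprint built for a variant with pins 1 and 2 swapped.
cad_pads = {"1": (0.95, -1.0), "2": (-0.95, -1.0), "3": (0.0, 1.0)}

for err in pin_to_pad_errors(datasheet_pins, cad_pads):
    print(err)
```

The swapped variant would place and solder perfectly, which is exactly why the comparison has to be made against the actual part geometry rather than by inspection.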

Designers have DfT software that runs within the design environment and can place test points to accommodate target testers and fixture rules. Final test of the assembled board often creates a more complex challenge and usually requires a manufacturing test engineer to define and implement the test strategy. Methods such as in-circuit or flying probe testing require knowledge of test probes, an accurate physical model of the assembly (to avoid probe/component collisions), and the required test patterns for the devices.
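One piece of that physical model, probe/component collision avoidance, can be approximated in two dimensions by measuring how close each test point sits to each component body outline. This is a simplified sketch under stated assumptions: bodies are axis-aligned rectangles, component height and probe-tip geometry are ignored, and the 0.5 mm clearance and all coordinates are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Body:
    """Component body outline as an axis-aligned rectangle (mm)."""
    ref_des: str
    xmin: float
    ymin: float
    xmax: float
    ymax: float


def distance_to_body(x: float, y: float, body: Body) -> float:
    """Shortest distance from a point to a rectangle (0 if inside it)."""
    dx = max(body.xmin - x, 0.0, x - body.xmax)
    dy = max(body.ymin - y, 0.0, y - body.ymax)
    return math.hypot(dx, dy)


def probe_conflicts(test_points: dict[str, tuple[float, float]],
                    bodies: list[Body],
                    clearance_mm: float = 0.5) -> list[tuple[str, str, float]]:
    """List (test point, component, distance) pairs closer than the clearance."""
    conflicts = []
    for tp, (x, y) in test_points.items():
        for body in bodies:
            d = distance_to_body(x, y, body)
            if d < clearance_mm:
                conflicts.append((tp, body.ref_des, round(d, 3)))
    return conflicts


bodies = [Body("U1", 0.0, 0.0, 5.0, 5.0), Body("C7", 10.0, 0.0, 11.0, 0.5)]
test_points = {"TP1": (5.3, 2.0), "TP2": (8.0, 0.2)}
print(probe_conflicts(test_points, bodies))  # [('TP1', 'U1', 0.3)]
```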

Manufacturing Process Optimization

Even while a new assembly line is being configured in preparation for future cost-efficient production, whether high-mix, high-volume or both, simulation software can aid the process. Many manufacturers are utilizing this software to simulate various line configurations combined with different product volumes and/or product mixes. The result is an accurate “what if?” simulation that allows process engineers to try various machine types, feeder capacities and line configurations to find the best machine mix and layout. Using line configuration tools, line balancers, and cycle-time simulators, a variety of machine platforms can be reviewed. Once the line is set up, this same software maintains an internal model of each line for future design-specific or process-specific assembly operations.

This has immediate benefits. When the product design data are received, creating optimized machine-ready programs for any of the line configurations in the factory, including mixed platforms, can be streamlined. A key to making this possible lies in receiving accurate component shape geometries, which have been checked and imported from the golden parts library. Using these accurate shapes, in concert with a fine-tuned rule set for each machine, the software auto-generates the complete machine-ready library of parts data offline, for all machines in the line capable of placing the part. This permits optimal line balancing, since any missing part data on this or that machine – which can severely limit an attempt to balance – are eliminated. The process makes it possible to run new products quickly because missing machine library data are no longer an issue. Auto-generating part data capability also makes it possible to quickly move a product from one line to another, offering production flexibility.
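Why missing machine library data hurts line balancing can be shown with a toy balancer. This is a greedy heuristic chosen for illustration, not the algorithm commercial line balancers use; it ignores feeder slots, nozzle changes and board transfer time, treats line cycle time as the busiest machine's placement time per board, and uses hypothetical machine rates and part counts.

```python
from __future__ import annotations


def balance(part_counts: dict[str, int],
            machine_cph: dict[str, float],
            library: dict[str, set[str]]) -> dict[str, float]:
    """Greedy sketch: assign each part family (largest first) to whichever
    machine with library data for it would end up with the lowest load.
    Returns seconds of placement time per board on each machine."""
    load = {m: 0.0 for m in machine_cph}
    for part, count in sorted(part_counts.items(), key=lambda kv: -kv[1]):
        capable = [m for m in machine_cph if part in library[m]]
        if not capable:
            raise ValueError(f"no machine has library data for {part}")
        cost = {m: count * 3600.0 / machine_cph[m] for m in capable}
        best = min(capable, key=lambda m: load[m] + cost[m])
        load[best] += cost[best]
    return load


# Hypothetical two-machine line: rated placements per hour.
machines = {"chip_shooter": 36_000, "flex_placer": 12_000}
board = {"0402_cap": 300, "0603_res": 120, "qfp_100": 4, "bga_256": 2}

full_library = {
    "chip_shooter": {"0402_cap", "0603_res"},
    "flex_placer": {"0402_cap", "0603_res", "qfp_100", "bga_256"},
}
# Same line, but the flex placer lacks library data for 0603_res.
missing_data = dict(full_library, flex_placer={"0402_cap", "qfp_100", "bga_256"})

for name, lib in [("full library", full_library), ("missing data", missing_data)]:
    load = balance(board, machines, lib)
    print(f"{name}: cycle time {max(load.values()):.1f} s/board")
```

With complete library data the resistors can shift to the flex placer (about 37.8 s per board); with that one part missing, they pile onto the chip shooter and the cycle time rises to 42.0 s.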

Programming the automated optical inspection equipment can be time-consuming. If the complete product data model – including all fiducials, component rotations, component shape, pin centroids, body centroids, part numbers (including internal, customer and manufacturer part numbers), pin one locations, and polarity status – is prepared by assembly engineering, the product data model is neutral enough that each different AOI platform can be programmed from a single standardized output file. This creates efficiency, since a single centralized product data model is available to support assembly, inspection and test.
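The "one model, many outputs" idea looks roughly like this in code: a single neutral record per placement, with a thin writer for each target platform. Everything here is hypothetical: the field subset, the two output layouts ("platform A" CSV and "platform B" JSON) and the part data stand in for real AOI import formats, which are vendor-specific.

```python
from __future__ import annotations

import csv
import io
import json
from dataclasses import asdict, dataclass


@dataclass
class Placement:
    """One entry in a neutral product data model (illustrative subset of fields)."""
    ref_des: str
    internal_pn: str
    customer_pn: str
    mfr_pn: str
    x_mm: float
    y_mm: float
    rotation_deg: float
    pin1_corner: str
    polarized: bool


def to_platform_a_csv(parts: list[Placement]) -> str:
    """Hypothetical AOI platform A: flat CSV keyed on internal part number."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["RefDes", "PN", "X", "Y", "Rot", "Polarized"])
    for p in parts:
        writer.writerow([p.ref_des, p.internal_pn, p.x_mm, p.y_mm, p.rotation_deg, int(p.polarized)])
    return buf.getvalue()


def to_platform_b_json(parts: list[Placement], fiducials: list[tuple[float, float]]) -> str:
    """Hypothetical AOI platform B: JSON that also carries the fiducials."""
    return json.dumps({"fiducials": fiducials, "parts": [asdict(p) for p in parts]}, indent=2)


model = [
    Placement("U1", "INT-0042", "CUST-7", "MFR-ABC", 12.5, 30.0, 90.0, "top-left", True),
    Placement("R5", "INT-0100", "CUST-9", "MFR-RES", 40.0, 8.25, 0.0, "n/a", False),
]
fiducials = [(2.0, 2.0), (98.0, 78.0)]

print(to_platform_a_csv(model))
print(to_platform_b_json(model, fiducials))
```

Because every writer reads the same records, a correction made once in the model reaches every inspection platform.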

Managing the assembly line. Setting up and monitoring the running assembly line is a complex process, but can be greatly improved with the right software support. Below is a list of just a few elements to this complex process:

- Registering and labeling materials to capture data such as reel quantity, PN, date code and MSD status.

- Streamlining material preparation and kitting area per schedule requirements, plus real-time shop floor demand, including dropped parts, scrap and MSD constraints.

- Feeder trolley setup (correct materials on trolleys).

- Assembly machine feeder setup, verification and splicing.

- Manual assembly, and correct parts on workstations.

- Station monitoring: capturing runtime statistics, efficiency, feeder and placement errors, and OEE calculations.

- Call for materials to avoid downtimes.

- Tracking and controlling production work orders to ensure visibility throughout the process.

- Ensuring the correct process sequence is followed, and that any test or inspection failure is corrected to “passed” status before product proceeds.
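The OEE calculation mentioned in the station-monitoring item is conventionally the product of availability, performance and quality. A minimal sketch using that standard definition; the shift figures are hypothetical.

```python
from __future__ import annotations


def oee(planned_min: float, downtime_min: float, ideal_cycle_s: float,
        total_count: int, good_count: int) -> dict[str, float]:
    """Classic OEE = availability x performance x quality."""
    run_s = (planned_min - downtime_min) * 60.0
    availability = run_s / (planned_min * 60.0)          # share of planned time running
    performance = ideal_cycle_s * total_count / run_s    # speed vs. ideal cycle time
    quality = good_count / total_count                   # first-pass good units
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


# Hypothetical shift on one SMT line.
shift = oee(planned_min=480, downtime_min=48, ideal_cycle_s=24,
            total_count=1026, good_count=1000)
for name, value in shift.items():
    print(f"{name:>12}: {value:.1%}")
```

Note that the product collapses to ideal cycle time × good count ÷ planned time (here 24 × 1,000 ÷ 28,800 ≈ 83.3%), so the three factors matter mainly for showing where the losses occur.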

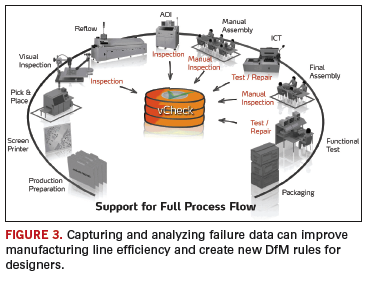

Collecting failure data. It is inevitable some parts will fail. By capturing and analyzing these data, causes can be determined and corrected. Figure 3 shows certain areas where failures are diagnosed and collected using software. One benefit of software is the ability to relate, in real-time, test or inspection failures with the specific machine and process parameters used in assembly and the specific material vendors and lot codes used in the exact failure locations on the PCB.
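Relating failures to the machine, feeder, vendor and lot behind each placement comes down to computing a failure rate per value of each traceability attribute. A minimal sketch; the traceability records, serial numbers and failure list are all hypothetical.

```python
from __future__ import annotations

from collections import Counter

# Hypothetical traceability data: what built each placement on each board.
trace = {
    ("SN001", "C12"): {"machine": "M1", "feeder": "F07", "vendor": "VendorA", "lot": "L42"},
    ("SN001", "C13"): {"machine": "M1", "feeder": "F08", "vendor": "VendorA", "lot": "L42"},
    ("SN002", "C12"): {"machine": "M1", "feeder": "F07", "vendor": "VendorB", "lot": "L17"},
    ("SN002", "C13"): {"machine": "M2", "feeder": "F03", "vendor": "VendorB", "lot": "L17"},
    ("SN003", "C12"): {"machine": "M1", "feeder": "F07", "vendor": "VendorA", "lot": "L42"},
    ("SN003", "C13"): {"machine": "M2", "feeder": "F03", "vendor": "VendorA", "lot": "L42"},
}
# Hypothetical test/inspection failures, by board serial and location.
failures = [("SN001", "C12"), ("SN002", "C12"), ("SN003", "C12")]


def failure_rates(trace: dict, failures: list, attribute: str) -> dict[str, float]:
    """Failure rate per value of one process attribute (machine, feeder, ...)."""
    placed = Counter(ctx[attribute] for ctx in trace.values())
    failed = Counter(trace[key][attribute] for key in failures if key in trace)
    return {value: failed[value] / placed[value] for value in placed}


for attribute in ("machine", "feeder", "vendor", "lot"):
    print(attribute, failure_rates(trace, failures, attribute))
```

In this toy data, feeder F07 fails every time while the vendor and lot rates are flat, pointing at the feeder rather than the material.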

Earlier, a set of DfM rules in the PCB design environment that reflects the hard and soft constraints to be followed by the designer was discussed. If these rules were followed, we could ensure that once design data reached manufacturing, they would be correct and not require a redesign. What we did not anticipate was that producing a product at the lowest cost and with the most efficiency is a learning process. We can learn by actually manufacturing this or similar designs, and determine what additional practices might be applied to DfM to incrementally improve the processes.

This is where the first feedback loop might help. If we capture data during the manufacturing process and during product failure analysis, this information can be used to improve DfM rule effectiveness. Continuous improvement of the DfM rule set based on actual results can positively influence future designs or even current product yields.

The second opportunity for feedback is in the manufacturing process. As failures are captured and analyzed (Figure 3), immediate feedback and change suggestions can be sent to the processing line or original process data models. Highly automated software support can reconfigure the line to adjust to the changes. For example, software can identify which unique machine feeder is causing dropped parts during placement. In addition, an increase in placement offsets detected by AOI can be immediately correlated to the machine that placed the part, or the stencil that applied the paste, to determine which tool or machine is in need of calibration or replacement. Without software, it would be impossible to detect and correlate this type of data early enough to prevent yield loss, rework or scrapped material.
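Both examples in that paragraph reduce to simple monitors: a drop rate per feeder, and a rolling mean of AOI placement offsets per machine. A minimal sketch; the thresholds, window size, feeder IDs and offset readings are hypothetical, and a production system would more likely use statistical control limits than fixed cutoffs.

```python
from __future__ import annotations

from statistics import mean


def feeders_dropping_parts(picks: dict[str, int], drops: dict[str, int],
                           limit: float = 0.005) -> list[str]:
    """Feeders whose drop rate (drops / picks) exceeds the limit."""
    return sorted(f for f, n in picks.items() if n and drops.get(f, 0) / n > limit)


def machines_drifting(aoi_offsets: list[tuple[str, float]], window: int = 5,
                      limit_mm: float = 0.05) -> list[str]:
    """Machines whose mean AOI placement offset over their most recent
    `window` parts exceeds limit_mm, i.e. candidates for calibration."""
    history: dict[str, list[float]] = {}
    for machine, offset in aoi_offsets:
        history.setdefault(machine, []).append(offset)
    return sorted(m for m, offs in history.items()
                  if len(offs) >= window and mean(offs[-window:]) > limit_mm)


picks = {"M1-F07": 2000, "M1-F08": 2100, "M2-F03": 1800}
drops = {"M1-F07": 31, "M1-F08": 2}
print(feeders_dropping_parts(picks, drops))  # ['M1-F07']

# AOI offset readings (mm) in inspection order; M2 is creeping upward.
aoi = [("M1", 0.02), ("M2", 0.02), ("M1", 0.025), ("M2", 0.03), ("M1", 0.02),
       ("M2", 0.04), ("M1", 0.018), ("M2", 0.05), ("M1", 0.022), ("M2", 0.06),
       ("M2", 0.07), ("M2", 0.08)]
print(machines_drifting(aoi))  # ['M2']
```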

Design through manufacturing should be treated as a continuum. One starts with the manufacturer’s DfM rules, followed by the designer and checked by the designer’s software; then come the complete transfer of data to the manufacturer using industry-recognized standards, the automated setup and optimization of the production line, the real-time monitoring and visibility of equipment, process and material performance, and finally the capture, analysis and correlation of all failure data. But the process does not end with product delivery, or even with after-sale support. The idea of continuum is that there is no end. By capturing information from the shop floor, we can feed that to previous steps (including design) to cut unnecessary costs and produce more competitive products.

John Isaac is director of market development at Mentor Graphics (mentor.com); john_isaac@mentor.com. Bruce Isbell is senior strategic marketing manager, Valor Computerized Systems (valor.com).

Ed.: At press time, Mentor Graphics had just completed its acquisition of Valor.

Bringing tutorial-level instruction to designers’ desktops.

Last month UP Media Group produced our third Virtual PCB trade show and conference for PCB design, fabrication and assembly professionals. Every year the event has grown and shown more promise as a format for bringing together the industry.

We originally started researching virtual trade shows in the late 1990s, but the technology was just not ready. There just was no platform for doing a virtual show in a way that we felt would appeal to our audience.

About four years ago, things changed. Editor-in-chief Mike Buetow called our attention to a company that had a good grasp of what we needed. For those of you who don’t know Mike, he is a thorough and hard-charging editor, with a good handle on most areas of the fabrication and assembly sides of the printed circuit board market. Once Mike set us on the path, UPMG’s Frances Stewart and Alyson Skarbek turned the concept of Virtual PCB into a must-attend event. This year we had a record registration of over 2,600 people from literally every corner of the globe. Those who missed the two-day live event can view the on-demand version, available through May 4.

I take this as another indicator of the power of the Internet. And though we at UPMG are, at heart, print kind of people, we realize the Internet has a huge and still-evolving role to play in how we interact with our readers and advertisers.

This leads me to our latest project. The PCB Design Conferences have always been one of my favorite projects. As an old PCB designer at heart, I realized from my own experience how much we need to learn about every aspect of design, and to stay in touch with technology that sometimes changes almost daily. A couple months ago, a friend turned me on to a software platform that allows us to take the virtual experience to a new level. This new project is called Printed Circuit University. PCU’s primary mission is to help PCB designers, engineers and management stay abreast of technology and techniques. We’ll accomplish this through short Flash presentations, webinars, white papers, resource links and blogs by some of the most interesting people in the industry. You’ll be able to post questions for peers to comment on, and share experiences and opinions on just about any subject that has to do with circuit boards.

Then there is the Design Excellence Curriculum. The DEC is a program we developed around 15 years ago for the PCB Design Conference. Over the years, thousands of PCB designers and engineers have taken the courses and furthered their knowledge of specific areas of PCB design. Today, the Web allows us to reach a greater number of people than ever, so we’ve decided to bring the DEC to Printed Circuit University.

How does it work? Think of a college curriculum where to get a degree in a specific subject you complete core classes like English and math that round out your general knowledge, and then follow a field of study that builds knowledge in the area you want to pursue. Core classes are on subjects that build the foundation for what every good designer should know: PCB fabrication, assembly and test; dimensioning and tolerances; laminates and substrates; electrical concepts of PCBs.

After passing the core courses, you'll be able to choose from a series of classes on specific subjects such as flex design, signal integrity, RF design, advanced manufacturing, packaging and a host of others, each designed to build your knowledge in that area. From there, you can pursue as many fields of study as you want, gaining more and more knowledge of all types of PCB design.

The platform we’ve settled on for PCU is the same used by universities and online learning institutions such as Penn State, Tennessee Tech, University of Iowa and many more. The platform establishes a real-time, online classroom where you can see the instructor and ask questions. You’ll be able to view presentation materials, and the instructor can even switch to a white board view to illustrate a point. Sessions will be archived for review at any time, and when you have completed a course of study, you’ll take an exam to demonstrate that you were listening in “class.”

We expect to launch Printed Circuit University at the end of June, so stay tuned. If you have comments or questions, please email me. In the meantime, stay in touch and we’ll do the same.

Pete Waddell is design technical editor of PCD&F (pcdandf.com); pwaddell@upmediagroup.com.

Merger mania strikes again. Will it play out differently this time?

Just when you thought it was safe, along comes déjà vu all over again!

In so many ways, our industry looks much different as we embark on the second decade of the “new millennium” than it did in the last decade of the past one. Fewer companies are competing, and there is a very different global geographic distribution. Quality and on-time delivery – every company’s means for dramatic improvement a decade ago – are no longer differentiators, as everyone has dramatically improved and performs equally. Technology itself has marched along so quickly that what was “cutting-edge” a decade ago would be considered “commodity” today. But in one way the 1990s are alive and well: Merger mania has once again struck industry boardrooms.

For those who may not remember (or who wisely forgot!), in the mid-1990s a buyout group began a rollup of smaller (albeit what in today's world would be considered large) companies into what is now Viasystems. On the heels of this business model, and with the encouragement of some of the then-brightest Wall Street analysts who covered our industry, others followed suit. Remember Hadco and Praegitzer? They were caught up in the mania, as were DDI, Altron, Continental Circuits (the Phoenix version), Coretec and a host of much smaller and all-but-forgotten companies.

Like many, I have fond memories of the halcyon ’90s. And I’m not necessarily against mergers; in fact, if someone came along with a boatload of money, I too might sell in the proverbial New York minute. But looking at the carnage, and considering the cost vs. value of those transactions, I have to ask, “What are you guys thinking?!”

If the mantra of the '90s was quality and on-time delivery, the mantra of the new millennium has been "value," and it takes some stretch to understand how most mergers create value. Early in my corporate career, I was responsible for "business planning," which included mergers and acquisitions. Because I worked for a Fortune 100 corporation, we sold a lot of businesses that no longer fit our ever-changing corporate "vision," usually poorly managed or neglected facilities.

We never seemed to do well buying other companies either. Too often after closing a deal, we would soon find that the culture, capability and claimed strengths of what we had just bought weren’t quite as advertised. More than a few acquired facilities quickly made their way to the list of poorly managed or neglected facilities that were sold! The exceptions were the few cases where the acquired facility filled a specific niche, or where we could shut down the facility and assimilate the business into an existing, better-managed facility. My take from a few years buying and selling was that, once all the dust settled from the deal, rarely was any value gained.

Looking back at the volume of merger activity in the '90s, I believe the same was true: little or no real value resulted from all the deals. Most of the companies that were actively hunting for deals are no longer in business. More telling, the capacity footprint is a shadow of its former self, both for the companies involved in those mergers and for the industry as a whole.

What is different this time? Instead of the heady, egocentric hype of the last round, this round has been lower key, in keeping with the economic mood of the times. In some ways today's transactions appear more like a hybrid of Jack the Ripper meets Pac-Man. But the results appear headed to the same conclusion, which is to say that little, if any, real value will result from the (sizable) investments made.

This brings me back to the déjà vu aspect of today's merger environment. Several of the megadeals have involved the same companies that lost so much the last time they ventured into the M&A world. You would think they would have learned that, as attractive as an acquisition may appear, the end results don't always warrant the time, talent and treasure initially invested and subsequently required. More to the point, in an industry that has a voracious appetite for investment in technology and the capital equipment to produce it, maybe money can and should be better spent building the competence of a company's technology franchise in order to build value.

Customers are searching for technology solutions – answers – for the next generation of product. Building value requires being able to invest in and develop technology. Equally, as the equipment used by many in our industry is getting long in the tooth, investing in more, older equipment and infrastructure via an acquisition heightens the risk.

Some may say that merging large companies will increase value by consolidating excess capacity or reducing the unsustainable "desperation" pricing that sometimes results when weaker companies make last-ditch efforts to fill plants. However, if those companies are that weak, they eventually will founder, and the same result will be achieved with no investment required.

I do hope that this time around, these mergers have a happier ending. In each case, hopefully the acquiring company really needs the additional capacity and capability, the acquired company's culture fits its needs, and the deal actually increases value for all.

But we should keep in mind the companies that survived previous downturns were, and are, ones that regardless of size or location have remained focused on building value by satisfying customer needs. And customers need technology development and solutions for tomorrow’s products. The greatest single value-adding strategy any company can follow is to stick to its knitting and invest in developing the technology and capability to service customers and their markets. PCD&F

Peter Bigelow is president and CEO of IMI (imipcb.com); pbigelow@imipcb.com. His column appears monthly.