The right test schedule may mean more steps in process, but fewer overall.

A good Lean strategy looks at all elements of the manufacturing process, including how a product is to be tested. From our perspective, ensuring a product is manufactured correctly through electrical test verification is where many designers and manufacturers fail during front-end planning. Test pad locations, for example, are getting tighter and smaller, and many companies aren’t designing for full in-circuit test coverage for this reason. This drives manufacturers to implement and rely more on optical inspection rather than full coverage electrical test. From a Lean perspective, this is a negative trend because ICT is actually the fastest, most actionable way to ensure board quality.

While AOI may catch workmanship defects, it won’t catch defective components. According to an Agilent study, analysis of manufacturing defect root causes suggests 10-15% of defects are attributable to nonfunctioning parts or defective materials rather than to the assembly process. We have seen similar statistics in our own manufacturing defect trends. So, in addition to reducing throughput, the lack of a robust electrical test schedule, such as ICT, can allow material-related defects to slip through the process and reach the end-customer.

In optimizing products for Lean manufacturing, EPIC’s process includes design for testability recommendations. First, products are analyzed for design for manufacturing using a rating system that prioritizes recommendations (“DfX for Lean Part II,” Circuits Assembly, May 2008). Then the team looks at the test access restrictions that hinder reliable ICT implementation. Some internal recommended test guidelines include:

- Consider boundary scan if test point availability is an issue. There must be test points on the JTAG pins to use boundary scan.

- All unused leads on devices and connectors should have a test pad.

- All digital IC control and enable pins connected to power or ground should connect via resistor.

- Every net must have a test point.

- Where possible, test points should be distributed evenly across the PCB to equally distribute test pin force across the PCB to minimize board flex.

- Test pads should be located on the lowest component height side of the board.

- EPIC-preferred test pads are 0.040˝ round. Those smaller than 0.032˝ can expect higher test fixture costs, and lower contact reliability in production.

- EPIC-preferred test pad C-C spacing is ≥0.100˝ (minimum 0.050˝).

- EPIC-standard test pad center to board edge spacing is >0.100˝ (minimum 0.100˝).

A properly designed PCB that permits full coverage access according to these guidelines will result in the lowest installation and operational cost of manufacturing test.
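The pad size and spacing guidelines lend themselves to an automated check during layout review. The following is a minimal sketch, not EPIC's actual tooling: the `Pad` record, the `check_pads` function and the finding labels are hypothetical names, coordinates are assumed to be in inches, and only the pad diameter and center-to-center spacing rules are covered.

```python
from dataclasses import dataclass
from itertools import combinations
from math import hypot

# Thresholds quoted in the guidelines above, in inches.
PREFERRED_DIAMETER = 0.040
SMALL_PAD_DIAMETER = 0.032   # smaller pads: higher fixture cost, lower contact reliability
PREFERRED_SPACING = 0.100    # preferred center-to-center spacing
MINIMUM_SPACING = 0.050      # minimum center-to-center spacing


@dataclass(frozen=True)
class Pad:
    """Hypothetical test pad record: net name, center position and diameter."""
    net: str
    x: float
    y: float
    diameter: float


def check_pads(pads):
    """Return (severity, message) findings for pads that miss the guidelines."""
    findings = []
    for pad in pads:
        if pad.diameter < SMALL_PAD_DIAMETER:
            findings.append(("risk", f"{pad.net}: diameter {pad.diameter:.3f} in. is below 0.032 in."))
        elif pad.diameter < PREFERRED_DIAMETER:
            findings.append(("note", f"{pad.net}: diameter {pad.diameter:.3f} in. is below the preferred 0.040 in."))
    # Compare every pair of pads for center-to-center spacing.
    for a, b in combinations(pads, 2):
        spacing = hypot(a.x - b.x, a.y - b.y)
        if spacing < MINIMUM_SPACING:
            findings.append(("risk", f"{a.net}/{b.net}: spacing {spacing:.3f} in. is below the 0.050 in. minimum."))
        elif spacing < PREFERRED_SPACING:
            findings.append(("note", f"{a.net}/{b.net}: spacing {spacing:.3f} in. is below the preferred 0.100 in."))
    return findings


if __name__ == "__main__":
    example = [Pad("N1", 0.0, 0.0, 0.040), Pad("N2", 0.03, 0.0, 0.030)]
    for severity, message in check_pads(example):
        print(severity, message)
```

The pairwise comparison is adequate for a sketch; a board with thousands of nodes would likely need a spatial index instead.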

One option for improving ICT coverage is boundary scan, or adding JTAG features into the ICT platforms. In the long run, integrating these methods of test is far less expensive than having no test access at all.

Smaller, denser designs push ICT manufacturers to improve ways of reliably contacting the smaller test points. Guided probe fixtures are one example. These fixtures demand a higher price, can be more costly to maintain, and are less reliable than ICT fixtures designed for PCBs that follow recommended guidelines. Each of these points negatively impacts manufacturing cost.

When DfT recommendations cannot be followed to permit full ICT test access, we perform electrical test coverage mapping to determine the best combination of inspection and test to ensure customer quality goals. If limited ICT access is available, this information is analyzed to determine which components are not tested or verified electrically by ICT. When functional test is also used, the untested parts list from ICT will then be compared to the functional test to assess the complete electrical test coverage schedule. The final untested list is then evaluated to determine if manual or optical inspection is necessary to ensure assembly conformance to the specifications. The method of inspection is predicated on the most efficient method in terms of inspection throughput.
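The coverage mapping described above amounts to successive set differences. As a rough illustration only, assuming each test stage can report the reference designators it verifies (the function name and data layout here are hypothetical):

```python
def map_coverage(all_parts, ict_tested, functional_tested=frozenset()):
    """Sketch of electrical test coverage mapping using set differences.

    all_parts: every reference designator on the assembly.
    ict_tested: parts verified electrically by ICT.
    functional_tested: parts exercised by functional test, if one is used.
    """
    all_parts = set(all_parts)
    # Parts that limited ICT access leaves untested.
    untested_by_ict = all_parts - set(ict_tested)
    # Of those, the parts that functional test does not cover either.
    needs_inspection = untested_by_ict - set(functional_tested)
    return {
        "untested_by_ict": sorted(untested_by_ict),
        "needs_inspection": sorted(needs_inspection),
    }


if __name__ == "__main__":
    report = map_coverage(
        all_parts={"R1", "C1", "U1", "J1"},
        ict_tested={"R1", "C1"},
        functional_tested={"U1"},
    )
    print(report)
```

The final `needs_inspection` list corresponds to the parts that would be evaluated for manual or optical inspection.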

Optical inspection strategy. When optical inspection is required, EPIC has chosen to create centralized inspection test centers that include both AOI and automated x-ray inspection, rather than embed AOI/AXI equipment on each line. Products requiring AOI/AXI run through the AOI/AXI test center. Those that do not require inspection go to electrical test. This strategy minimizes capital equipment expense and floor space requirements, as multiple lines feed a single center.

Standardized functional test platforms. A key portion of our Lean strategy has been standardized equipment platforms throughout all factories. While not a new concept in placement and ICT equipment, in this case, it is also extended to functional test platforms. We began developing our own functional test platforms in the 1990s. They use a single software tool and change fixtures for each product. There are variations such as a slide line design that permits volume automated testing of specific products. Operators become versed on the functional platform and common software, so minimal training is required as products are added. Test technology upgrades can be applied across all products.

Additionally, functional testing is designed to mimic product form, fit and function exactly to better correlate with customer data. RF testing, integrated vision testing and LCD or display verification are incorporated in the standard functional test platform. At the IC level, a robust functional test can catch issues that electrical test will not. For example, functional test is required to fully test audio or RF features in an IC.

Basic philosophical principles applied in designing the standardized platform included:

- Modular systems to permit faster scalability as production volume increases.

- Design platforms to enable quick product changeover for top- and bottom-side probing.

- Integrate functional test into production flow to eliminate the possibility of shipping untested products.

- Load/unload process should minimize operator time.

- Include paperless repair tracking of failures.

- When volumes dictate, systems should permit multiple product testing side-by-side, with controlled binning systems.

Customers have multiple options when it comes to designing for and selecting a test strategy. At EPIC, if the product design permits it, ICT is designed as a first priority for the most efficient test process. When impossible, specialized fixturing, gages, optical inspection or functional test are used in combination to ensure a complete test schedule is implemented.

From a Lean perspective, test is an area with many opportunities for improvement. Yet once a product is designed, redesigning for test may not be practical, so inefficiencies remain. Thorough analysis of test strategy during product development and interfacing with the manufacturing teams will pay dividends throughout the product lifecycle in on-time delivery, quality, and process cost.

Chris Munroe is director of engineering at EPIC Technologies (epictech.com); chris.munroe@epictech.com.

Over 20 years, cleaning chemistries have evolved from blasts of solvents to intermolecular interactions.

From solvent replacements in the 1990s to modern chemistries, the term “reactivity” has been used synonymously with the level of cleaning performance. In other words, higher pH meant better flux removal, and pH values between 8 and 10 were considered “moderately reactive.” While this simplification held true for traditional surfactants, modern cleaning agents began to include other chemical pathways to flux removal. Today’s performance of aqueous cleaning agents hinges on previously unknown reaction pathways that in turn permit the complete removal of alkalinity from the product.

Now, neutral – neither acidic nor alkaline – product technologies offer cleaning chemistries new capabilities. With the implementation of the Montreal Protocol, PCB cleaning changed from a bulk chemistry supply to a specialized, engineered solution proposition. Looking back at the products used, we can reminisce and marvel at the progress made.

Alkalinity has been synonymous with reactivity since the early 1990s. The world has changed! Starting with the first direct solvent-based replacements in the early 1990s, the industry adapted and built a process around these “new” products. Companies developing these technologies were able to collaborate directly with the users. It did not take long, however, for the shortcomings of solvents and their impact on the cost and efficiency of the overall process to limit the cleaning step. Assemblers also started to ask for water-based solutions. As a result, the first generation of aqueous products entered the market. They showed high alkalinity and low levels of organic constituents. Although these products relieved some limitations of solvents, they created other process issues. For example, their cleaning performance could not match that of solvent-based products. They also presented a number of material compatibility issues, as the alkalinity affected the quality of the products being cleaned. Due to their limited ability to solubilize contamination, the bath life of these products was very short, and the process became maintenance heavy.

In the late 1990s, the cleaning industry began to introduce “modern biphasic” product technologies. They were revolutionary as solvent- and surfactant-based advantages were combined, without many of the respective disadvantages. Bath life was now the term that changed the face of process costs. These products were able to precipitate contamination from the medium, and a bath life of 6 to 8 months was possible. It meant significant process savings: The cost per cleaned part was reduced to a fraction of what it had been for surfactant- and solvent-based alternatives.

These new emulsion-type products also were able to hold their own with solvents and far exceeded the performance of traditional surfactant-based products. Interestingly, they also showed these elevated cleaning performance levels at concentrations of 10 to 15%. Previously, users had been informed that a 20 to 30% concentration was necessary to ensure full cleaning ability. Lower concentrations only slightly improved the compatibility with PCB assembly materials, such as anodized or blank metal surfaces (as pH is on a logarithmic scale). The new technologies were fully biodegradable and met health and environmental regulations and laws.
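The parenthetical point about the logarithmic pH scale can be made concrete with a quick calculation. This is an idealized sketch, assuming hydroxide concentration scales in direct proportion to the cleaning agent concentration (a simple strong base with no buffering); real formulations may behave differently, and the function name is illustrative.

```python
from math import log10


def ph_shift_on_dilution(old_concentration, new_concentration):
    """Approximate pH change when an alkaline cleaner is diluted.

    Idealized assumption: [OH-] is proportional to the agent concentration,
    so the shift is log10(new / old), whatever units both values share.
    """
    if old_concentration <= 0 or new_concentration <= 0:
        raise ValueError("concentrations must be positive")
    return log10(new_concentration / old_concentration)


if __name__ == "__main__":
    # Halving the concentration from 30% to 15%.
    print(round(ph_shift_on_dilution(30.0, 15.0), 2))
```

Under this assumption, halving the concentration lowers the pH by only about 0.3 units, which is why dilution alone does little for alkalinity-sensitive surfaces.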

New technologies are emerging that further address the remaining material compatibility concerns. The wetted parts, meaning the materials that “see” the cleaning agent during operation, are numerous and interestingly, they do vary in quality from manufacturer to manufacturer.

Recently emerged neutral product technologies are fully compatible with anodized aluminum parts, sensitive metals and other previously challenging surfaces. The main difference now is that there is no alkalinity in the cleaning agent, yet the chemistries can still complete the same job as a “defluxing” agent. This unique physical property also comes into play, as users want to minimize equipment wear-and-tear. The wastewater solution is more cost-effective as well.

Perhaps most important, recent cleaning studies showed that pH-neutral products can remove Pb-free, no-clean residues even more effectively than their alkaline counterparts. With these benefits in hand, a new reactivity standard has emerged. Obviously, the classic acid-base reaction is no longer part of the chemical removal pathway of the residues. With these new products, the physical chemical pathway rests instead on intermolecular interactions. The physical chemist Peter J. W. Debye was the first scientist to study molecular dipoles extensively. Terms such as induced dipole are becoming relevant: Induced dipoles cause a brief electrostatic attraction between two molecules. The electron immediately moves to another point and the electrostatic attraction is broken. Additionally, dipole-dipole interactions seem to play a pivotal role in neutral defluxing, as permanent dipoles in molecules are created. Often, molecules can even have dipoles within them, but have no overall dipole moment. This occurs if there is symmetry within the molecule, causing the dipoles to cancel one another. Tetrachloromethane is one such molecule. In summary, the new chemical removal pathways hinge on electrostatic interactions between cleaning agent and contamination, a phenomenon that will lay the groundwork for future innovations.

In short, in 20 years, we have moved from ozone-depleting products to solvent and alkaline technologies to more modern products that now seem to offer the overall largest process window, even without alkaline constituents. Has any other electronics manufacturing process seen such rapid and positive advancement?

Harald Wack, Ph.D., is president of Zestron (zestron.com); h.wack@zestron.com. His column appears regularly.

OEMs are no longer leaving test strategies in their supply-chains’ hands.

In my role as a technical marketing engineer, I work with test engineers from OEMs, EMS companies and ODMs, learning about their test needs and challenges, which range from common threads to unique needs. One industry commonality is the continuous need to drive down the cost of test. This may be obvious, especially during the recent economic downturn, but even when the market is buoyant, manufacturers continue to expect us to lower test costs, while expecting far greater coverage and test capabilities. When you drill down a little further though, the differences in expectations start to emerge.

While OEMs have traditionally been pioneers when it comes to technology adoption, I have observed that for EMS firms and ODMs, new test technology is a need rather than a choice. While EMS companies do play in the consumer electronics market, many of the bigger players also manufacture more sophisticated products like high-end server, datacom and telecom boards – products that do not face the harsh cost pressure of consumer electronics. There are still cost-down expectations for these products, of course, but EMS firms traditionally have been more willing to invest in newer test technology to overcome the challenge in testing assemblies with technologies such as high-speed differential signals.

That’s not to suggest ODMs are not investing. OEMs are driving their ODM supply chains to adopt new technology to keep up with product requirements such as notebook motherboards with high-speed differential signals, and to cope with PCB real estate demands as the number of nodes increases while the PCB size decreases, making it harder to put testpoints on the board.

We see our key EMS customers involved with manufacturing high-end boards ranging from 7,000 to 10,000 nodes for, say, high-end servers; these are very expensive products, and manufacturers will do everything to achieve maximum test coverage, employing a blend of x-ray to in-circuit and sophisticated functional tests. For ODMs, cost and time-to-market are more pressing issues. While maintaining higher test coverage requirements, ODMs also demand faster test methodologies at even lower costs, with their investments typically centered on a combination of manufacturing defect analyzers, in-circuit tests and functional tests at the board level.

It was observed some years back that OEMs were leaving test strategies more in the hands of their outsource partners. However, I think that trend has petered out, as the pace of technology development puts pressure back on OEMs to drive contractors toward higher standards of tests, to cater for wider and more in-depth test coverage for the onslaught of sophisticated components.

Concurrently, the proactive role played by many OEMs within industry bodies such as IEEE, iNEMI and other initiatives to drive new standards on boundary scan and BIST tests, just to name a couple, will help speed technology adoption.

Within the industry, the role of the outsourced service providers or vendors will grow in depth compared with a few years back. The reason for this is the level of engineering responsibility within the larger manufacturing organizations is rapidly evolving. Many of my production engineer contacts are no longer just engaged in test engineering roles: They wear multiple hats, which include coordinating requirements with customers, managing yield, managing system maintenance, and working with test system application engineers, as well as test program and fixture vendors.

Production engineers no longer have the luxury of time to work on deeper-level debugging and test development. However, another new equilibrium is taking shape, with the workload flowing to more test solution providers and fixture vendors – themselves a growing new breed of technopreneurs, if I may leverage this term to describe engineers-turned-small business owners who still operate within their domain knowledge.

The fallout from the recent economic downturn created new opportunities. In China, there is a well-known term for this called wei ji. The phrase means “crisis,” but the individual characters stand for “danger” and “opportunity.” Many of the technopreneurs who lost jobs during the downturn now find themselves fitting a timely and niche market to provide services back into the supply chain from which they came, albeit now with more flexibility and scalability.

For test equipment vendors to be successful, they need a solid network of partners, and technopreneurs help expand and strengthen that network by providing the needed technical knowledge in test development and debugging, as well as cost-effective local responsiveness required in the 24/7 manufacturing environment.

Test equipment vendors also have a growing responsibility – as technology coach and innovator. Not only must vendors continue to innovate in test, but we also must train our internal team well, which consists of the entire network of expert application engineers, field sales engineers and support partners – from fixture vendors to application solutions providers, certainly not forgetting the end-customers, to ensure they are technology-ready. In this respect, one common challenge across the industry is resistance to change whenever a technology is introduced. The groans of many production engineers are almost audible when we try to introduce a technology on top of already numerous responsibilities. Moving forward, training will grow in importance to ensure each area of this EMS-ODM-CEM equilibrium can evolve rapidly to adapt not just to new technology, but to the shorter, sharper economic cycles we have come to expect.

Jun Balangue is technical marketing engineer at Agilent Technologies (agilent.com); jun_balangue@agilent.com.

Microsectioning shouldn’t be taken lightly, lest the sample be damaged.

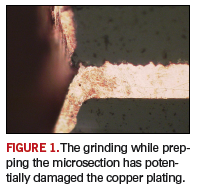

The microsection image shows an open connection between the through-hole copper plating and the innerlayer tracking. The microsection has been poorly prepared, with potential damage to the plating during grinding. As shown, no resin is in the hole to support the through-hole copper plating. The surface of the copper is rounded, making it impossible to prove the root cause of the problem in this example.

It is accepted that the sample has been poorly prepared. Any time a section is being prepared, it is important that resin enters the through-hole. Via holes may be coated with solder mask; in this case, the mask surface must be broken to permit resin to enter the hole. If a section is found to have no resin in the hole before it has been ground to the center, it’s important to stop grinding. This permits the hole to be filled with resin and re-cured prior to further grinding of the sample.

These are typical defects shown in the National Physical Laboratory’s interactive assembly and soldering defects database. The database (http://defectsdatabase.npl.co.uk), available to all Circuits Assembly readers, allows engineers to search and view countless defects and solutions, or to submit defects online.

Dr. Davide Di Maio is with the National Physical Laboratory Industry and Innovation division (npl.co.uk); defectsdatabase@npl.co.uk.

Sizzling sounds from the wave are a giveaway for this process indicator.

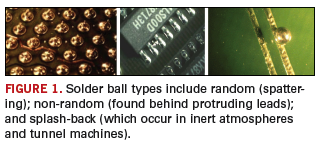

A solder ball is defined as a small sphere of solder adhering to a laminate, resist, or conductor surface. Solder balls generally occur after wave or reflow soldering. The types include random, or spattering type; non-random, which are found behind the protruding leads; and splash-back, which are solder balls from fully inerted and tunnel machines.

Random solder balls are easiest to address, as they are process-related. If a “sizzle” can be heard while the board is going over the wave, the preheat is too low or the vehicle is not fully evaporated. Other items to check are 1) the solder wave; if uneven, clean the solder nozzle assembly and check for parallelism; 2) flux contamination; if contaminated, it needs to be replaced, and 3) the pallet design, which should have vents to permit outgassing.

Non-random solder balls are found on the bottom side of the board, over many boards, usually to the trailing side of the protruding lead. They occur when the flux applied is insufficient or burns off too soon in the wave. Also, the conveyor speed may be too high.

Splash-back solder balls occur when the wave height is set too high or the wave is too hot, when the air knife is set incorrectly, when there is excess turbulence in the wave, or when nitrogen increases surface tension.
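For a troubleshooting aid, the three solder ball types and their first checks can be captured in a simple lookup. This is only an illustrative sketch that restates the checks from the text; the dictionary and function names are hypothetical.

```python
# First checks for each solder ball type, summarized from the text above.
SOLDER_BALL_CHECKS = {
    "random": [
        "Sizzle over the wave: preheat too low or flux vehicle not fully evaporated",
        "Uneven solder wave: clean the nozzle assembly and check parallelism",
        "Contaminated flux: replace it",
        "Pallet design: confirm vents permit outgassing",
    ],
    "non-random": [
        "Flux insufficient or burning off too soon in the wave",
        "Conveyor speed too high",
    ],
    "splash-back": [
        "Wave height too high or wave too hot",
        "Air knife set incorrectly",
        "Excess turbulence in the wave",
        "Increased surface tension due to nitrogen",
    ],
}


def checks_for(ball_type):
    """Return the list of first checks for a solder ball type (case-insensitive)."""
    key = ball_type.strip().lower()
    if key not in SOLDER_BALL_CHECKS:
        raise ValueError(f"unknown solder ball type: {ball_type!r}")
    return SOLDER_BALL_CHECKS[key]


if __name__ == "__main__":
    for item in checks_for("Non-random"):
        print("-", item)
```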

In the event of solder balls, the primary process setup areas to check are:

- Conveyor speed too slow.

- Too much time over the preheater, causing the flux to burn off too quickly.

- Dwell time too long, causing the flux to be destroyed before exiting.

- Topside board temp too low.

- Conveyor speed too fast.

- Time over the preheat is not long enough to dry off the flux carrier.

- Not enough flux, or the flux is not active enough.

- Nitrogen use may increase solder ball occurrence.

- Flux carrier not being completely dried by the preheater.

- Water-based fluxes should use forced air convection preheat.

- Too much flux has been applied.

Other things to look for in the process:

- Solder temperature too high.

- Preheat too low.

- Insufficient flux blow-off.

- Solder wave height high.

- Contaminated flux.

- Conveyor speed high.

- Solder wave uneven.

- Flux-specific gravity too low.

- Defective fixture.

Other things to look for:

- Board contamination.

- Moisture in laminate.

- Laminate not fully cured.

- Defective mask material.

- Poor plating in the hole.

- Poor pallet design.

Paul Lotosky is global director - customer technical support at Cookson Electronics (cooksonelectronics.com); plotosky@cooksonelectronics.com.

Precautions should be taken to prevent cross-contamination of disparate materials.

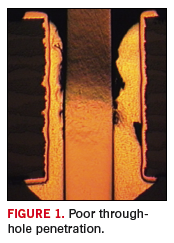

As part of a changeover from SnPb assembly to Pb-free, an electronics manufacturer chose SnAg0.3Cu0.7 (SACX) alloy as a replacement. Almost immediately came the headaches – problems that included pad lifting and poor through-hole penetration. The boards were coated with SnCuNi surface finish and had a thickness of 0.062˝. The components were through-hole with SnPb surface finish and pin-to-hole (aspect) ratios of 0.48 to 0.54.

Three components per board were chosen to be cross-sectioned. The cross-sections were inspected using a microscope and a scanning electron microscope (SEM). Figure 1 shows poor through-hole penetration of one of the components. Lack of wetting was observed on the component lead, resulting in lack of soldering inside the barrel. This problem was observed with several components subsequently examined.

Because no virgin material was available for testing, the root cause could only be suggested: possibly poor plating of the component leads, which did not help the solder to wick up and fill the barrel. It was also considered possible that the flux was burning off during preheating and was therefore not active during soldering. Without flux during soldering, oxidation is produced, which impedes wetting.

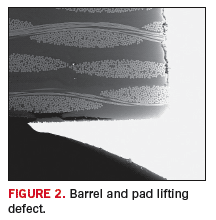

The second problem was pad lifting. SEM analysis of solder joints showed several instances not only of pad lifting, but also of barrel lifting (Figure 2). During soldering, the heated board expands in the Z-direction, then contracts during cooling. Pad and barrel lifting, in which the solder pad or barrel lifts from the board as it cools, is therefore an effect and not a defect. The stress during joint formation is increased by the mismatch in the coefficients of thermal expansion of the materials used. In this case, lead from the component leads migrates to the joint and forms a secondary alloy that has a lower melting point, resulting in a CTE mismatch with the Pb-free alloy. Nevertheless, none of these problems is considered a defect. IPC-A-610D states pad lifting is considered a defect for Classes 1, 2 and 3 when the separation between land and laminate surface is greater than one pad thickness, and IPC-A-600G states resin recession is acceptable for all classes after thermal stress, unless specified by customer.
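The pad lifting criterion quoted above reduces to a single comparison. The sketch below encodes only that sentence as stated here, with a hypothetical function name; it is not a substitute for the standard itself, and both measurements are assumed to be in the same units.

```python
def pad_lift_is_defect(separation, pad_thickness):
    """Apply the pad lifting criterion as quoted in the text.

    A lift counts as a defect (Classes 1, 2 and 3) only when the separation
    between land and laminate surface exceeds one pad thickness.
    """
    if separation < 0 or pad_thickness <= 0:
        raise ValueError("separation must be >= 0 and pad_thickness > 0")
    return separation > pad_thickness


if __name__ == "__main__":
    # Illustrative measurements in inches, not taken from the case study.
    print(pad_lift_is_defect(separation=0.0010, pad_thickness=0.0014))
```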

The copper barrel consists of a thin electroless copper deposited to the drilled laminate, and a codeposit of electrolytic copper plating. The strength of the copper barrel adhesion is determined by the quality of the drilled hole and electroless copper deposit, and the thickness of the electrolytic copper, which should be at least 0.001˝.

Pb-free alloys interact with other materials in occasionally negative ways. Precautions should be taken to prevent cross-contamination of Pb-bearing and Pb-free materials, well outside of the RoHS compliance issue, because in fact, it might become a manufacturing and product reliability issue.

It is also important to optimize the wave profile every time there is a change in materials. General recommendations for the improvement of through-hole penetration include increasing the solder temperature, using a stronger flux, or using a “smart” wave. To reduce pad lifting, avoid low-melting solder contamination, optimize material selection, or keep solder temperature at its lowest practical set point. If possible, higher Tg and lower CTE laminates should be used.

Ursula Marquez de Tino, Ph.D. is a process and research engineer at Vitronics-Soltec, based in the Unovis SMT Lab (vitronics-soltec.com); umarquez@vsww.com. Her column appears monthly.