Components with Sn99 or other whisker-prone finishes can now be rapidly de-taped, tinned, cleaned and re-taped.

Component finishes made up primarily of Sn99, with the remainder being silver, nickel, copper, germanium or some combination thereof, have been linked to tin whisker growth, increased tombstoning of small chip capacitors and resistors, shorter component shelf life (solderability that degrades over time, because Sn99 oxidizes faster than leaded finishes), and other issues.

While certain high-reliability products are exempt from RoHS and similar directives, all are ultimately affected as a result of component vendors’ desire to provide a common Sn99 finish. It is not economical for component suppliers to provide the same components in both Sn63 and Sn99 finishes. Gold as a final finish is seeing increased use as a protective coating to prevent oxidation, but must be removed in high-reliability soldering applications. As more components come only with gold finish, the need for rapidly de-taping, tinning to remove the gold using a dynamic wave, and then re-taping becomes more pressing.

Tin whiskers are increasingly problematic, and some studies detail the probability of tin whisker bridging.[1] While high-reliability military, aerospace, avionics, traffic control, medical and industrial equipment is exempt from the RoHS Directive (and for good reason), all of it needs some type of whisker mitigation method to disposition Sn99-finished parts. Industry standards for high-reliability electronics specifically require that a whisker mitigation method (tinning with solder containing at least 3% lead) be used for any component with a Pb-free finish, and the tinning must cover 100% of the component lead, not just the portion to be soldered.[2] Any gold on the component leads, no matter the thickness, shall be removed to prevent gold embrittlement. To achieve these objectives, many rely on a tinning and component repackaging service, performed either in-house or by an outside provider. This traditionally has been a manual process due to the wide variety of component types and requirements, but increasing volumes and process variability are quickly rendering manual tinning costly and impractical. Here are some of the reasons:

- To prevent tin whisker growth, the entire component lead or termination must be coated, either with a SnPb alloy or with some other stress-mitigating alloy. To be effective as a whisker mitigation method, the tinning solder must contain at least 3% Pb.

- At the same time, the molten solder should never contact the component body for more than two seconds, to prevent damage to internal connections or lead-frames that could reduce reliability.[3]

- To apply solder coating over the entire component lead or termination right up to the component body without damaging the component, the immersion dwell time and depth must be precisely controlled so that solder wicks up the last 0.003˝-0.005˝ of the termination. This prevents thermal shock damage to the component while leaving no portion of the Sn99 finish exposed, and it requires a computer-controlled automated system. In addition, the tinning flux must be a type that remains on the component lead throughout the tinning cycle to ensure good wetting.

- The solder level must be precisely controlled so that an automated system can be fully utilized. Manually filling solder to a precise level within a pot, or manually dipping a component with the required precision and holding it for a dwell time measured in milliseconds, is humanly impossible.
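The depth and dwell constraints in the list above can be expressed as a small planning check. This is a minimal sketch, not V-Tek's control software: `plan_dip`, `DipPlan` and the decision to cap dwell at the 2 s body-contact limit are hypothetical; only the 0.003˝-0.005˝ wick allowance and the 2 s limit come from the text.

```python
from dataclasses import dataclass

# Limits taken from the text; everything else here is illustrative.
WICK_ALLOWANCE_MIN_IN = 0.003   # leave at least this much lead for solder to wick up
WICK_ALLOWANCE_MAX_IN = 0.005   # ...and no more than this much
MAX_BODY_CONTACT_S = 2.0        # molten solder vs. component body limit


@dataclass
class DipPlan:
    depth_in: float  # immersion depth measured from the lead tip (inches)
    dwell_s: float   # immersion dwell time (seconds)


def plan_dip(lead_length_in: float, wick_allowance_in: float, dwell_s: float) -> DipPlan:
    """Hypothetical planner: dip the lead to within `wick_allowance_in` of the
    body so solder wicks up the remainder instead of the bath touching the body."""
    if not WICK_ALLOWANCE_MIN_IN <= wick_allowance_in <= WICK_ALLOWANCE_MAX_IN:
        raise ValueError("wick allowance outside the 0.003-0.005 in window")
    # Assumption: conservatively keep dwell under the 2 s body-contact limit,
    # since wicked solder reaches the body by the end of the dip.
    if not 0 < dwell_s < MAX_BODY_CONTACT_S:
        raise ValueError("dwell must be positive and shorter than 2 s")
    depth_in = lead_length_in - wick_allowance_in
    if depth_in <= 0:
        raise ValueError("lead is too short for the requested wick allowance")
    # Round away floating-point noise (well below a 0.001 in z-axis step).
    return DipPlan(depth_in=round(depth_in, 4), dwell_s=dwell_s)


plan = plan_dip(lead_length_in=0.040, wick_allowance_in=0.004, dwell_s=0.5)
print(plan)
```

Rejecting out-of-window inputs up front, rather than clamping them, mirrors the idea that a part which cannot be dipped safely should be flagged for disposition instead of processed anyway.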

As mentioned, oxidation issues with older Sn99-finished components are just beginning to materialize. Because pure tin oxidizes much more rapidly than a lead-containing alloy, Sn99 parts have a shorter shelf life before wetting defects begin to add to the DPMO (defects per million opportunities) of any soldering process. In addition to simple non-wetting or poor wetting defects, tombstoning can become a major issue with oxidized Sn99 chip capacitors and resistors, because one end may not wet as readily as the other, and this imbalanced wetting action is what pulls the component up toward one pad or the other.

For those companies required to meet the Pb-free portion of the RoHS restrictions, tinning with SnPb37 solder to prevent whisker growth is not an option.

However, alloys such as Kester’s K100LD or Nihon Superior’s SN100C[4] are doped with trace amounts of other elements that may help inhibit tin whisker growth. These alloys can be used to tin components within the robotic tinning cell and will also restore the solderability of oxidized Sn99 components.

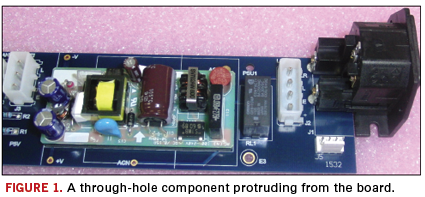

For these reasons, General Dynamics’ Advanced Information Systems group approached V-Tek to build a robotic tinning cell using a precise component handling system (Figure 1).

The robotic tinning platform performs the following steps:

- An Epson robot picks the component either from standard 8 to 45 mm tape fed into the de-taper (which can be a standard feeder from most pick-and-place machines) or from any JEDEC matrix tray. After processing, the parts are re-taped by a Hover-Davis TM-50 re-taper, which is integrated into the robotic platform and controlled by the robot software. Matrix tray locations are taught into the robot software (Figures 2 and 3).

- The V-Tek robot is set up to use Mydata nozzles or those from other common pick-and-place machines.

- The robot then performs a process control inspection of the previously formed component leads using a Coherix 3-D camera system to inspect for bent or abnormal component leads, lead coplanarity, toe-to-toe span and heel-to-heel span to within 0.001˝. Rejects are placed in a reject tray for disposition or rework.

- After 3-D inspection, the robot applies a halide-free water-soluble flux specially formulated for automated tinning. This flux prevents bridging on fine-pitch leaded components.

- Next, the robot dips the component into a nitrogen-blanketed dynamic (flowing) solder pot (Figure 4). Immersion depth is controlled to within 0.001˝ on the z-axis, dwell time can be programmed in increments as small as 1 ms, and the full range of component package styles can be processed, including SOICs, QFPs, SOT-X, connectors, and leadless chip capacitors and resistors down to 0402 size. The part can be dipped at virtually any angle, depth and dwell.

- After the tinning step is completed, the robot holds the component precisely in a hot deionized water wash system that is an integral part of the platform (Figure 5), and then presents the component to an air knife system for drying.

- The robot then brings the tinned and cleaned component over a second Coherix 2-D inspection system (Figure 6) to verify there are no solder bridges, icicles or excess solder, insufficient solder coating, or other solder defects (Figure 7).

- Next, the robot places the finished component back into a new tape or matrix tray or reject tray for disposition/rework (Figure 8). The tray position is programmable and can be taught to the machine.

- As previously described, de-taping of components is performed using pick/place feeders clamped onto the robotic platform, and the robotic software controls sequencing. Feeders from most major pick-and-place machines can be used.
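The handling sequence above amounts to a simple routing rule: a part that fails either inspection goes to the reject tray. A minimal sketch, assuming hypothetical `Station` names and plain boolean inspection results standing in for the real Coherix and robot interfaces:

```python
from enum import Enum, auto
from typing import Callable, List


class Station(Enum):
    PICK = auto()          # de-taper feeder or JEDEC matrix tray
    INSPECT_3D = auto()    # bent leads, coplanarity, toe/heel span
    FLUX = auto()
    SOLDER_DIP = auto()    # nitrogen-blanketed dynamic solder pot
    WASH = auto()          # hot deionized water wash
    AIR_KNIFE = auto()
    INSPECT_2D = auto()    # bridges, icicles, insufficient coating
    PLACE_GOOD = auto()    # re-taper or output tray
    PLACE_REJECT = auto()  # reject tray for disposition/rework


def run_cycle(pre_ok: Callable[[], bool], post_ok: Callable[[], bool]) -> List[Station]:
    """Return the stations one part visits. `pre_ok` and `post_ok` are
    hypothetical stand-ins for the 3-D and 2-D inspection verdicts."""
    route = [Station.PICK, Station.INSPECT_3D]
    if not pre_ok():
        return route + [Station.PLACE_REJECT]  # reject before any tinning
    route += [Station.FLUX, Station.SOLDER_DIP, Station.WASH,
              Station.AIR_KNIFE, Station.INSPECT_2D]
    route.append(Station.PLACE_GOOD if post_ok() else Station.PLACE_REJECT)
    return route


print([s.name for s in run_cycle(lambda: True, lambda: True)])
```

Rejecting at the 3-D inspection step, before flux and solder are applied, avoids spending a tinning cycle on a part that will be dispositioned anyway.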

The system can handle small quantities of components quickly and efficiently, providing the flexibility needed for small lots. In addition, the robotic tinning cell can be used for many other applications. One example is to use the robot to dip BGAs into the solder pot to a precise depth to remove the old solder balls as part of a BGA rework/reballing process.

Acknowledgments

The authors would like to thank Mark Jensen and George Andreadakis of V-Tek, and Dan Volenec and Mike Soltys of General Dynamics AIS, for their long and hard work in the development, debugging, programming and qualification of the Robotic Tinning machine.

References

1. S. McCormack and S. Meschter, “Probabilistic Assessment of Component Lead-To-Lead Tin Whisker Bridging,” International Conference on Soldering and Reliability, May 2009.

2. J-STD-001DS, “Space Applications Electronic Hardware Addendum to J-STD-001D Requirements for Soldered Electrical and Electronic Assemblies,” September 2009.

3. Shirsho Sengupta and Michael G. Pecht, “Effects of Solder-Dipping as a Termination Re-finishing Technique,” doctoral thesis, August 2006.

4. Keith Sweatman, personal communications.

Richard Stadem is a principal process engineer at General Dynamics Advanced Information Systems (gd.com); richard.stadem@gd-ais.com. Cornel Cristea is director of engineering at V-TEK Inc. (vtekusa.com).

Positioning CSPs and noise-sensitive devices are two of a host of considerations.

Effective, accurate component placement takes into account the best practices of design and assembly, both of them inextricably intertwined. The layout engineer must take special care to correctly place components on the board, since this has a direct bearing on assembly and testability.

Also, with the evolution of various technologies, assembly takes on newer meanings, dimensions, and demands. In particular, use of fine-pitch BGAs is escalating, challenging current assembly practices and procedures. In these situations, PCB design and assembly know-how and experience are the linchpins for effective placement.

Design for assembly takes into account such factors as through-hole versus surface mount components, critical ribbon and cable assemblies, cutouts, vias, decoupling capacitor placement, mechanical aspects, and others. Through-hole technology has become more of a specialty, while surface mount is the basis for most of today’s designs.

In terms of placement and routing, the beauty of an SMT design is that it makes better use of the board’s real estate, because both sides of a board can be utilized. This creates certain challenges, however. For instance, an experienced PCB designer doesn’t want to place a noise-generating device or a sensitive high-frequency device close to the clock, where the signal will incur interference. Improper component placement raises the probability of high-level noise and an unacceptable signal-to-noise ratio (SNR).

Through-hole is mostly used for connectors, typically for signals coming into and exiting the PCB, although SMT connectors are increasingly used and some applications employ a combination of surface mount and PTH components. When a PTH connector is used, no other component can be placed directly opposite it on the other side of the board, since there is no usable real estate there.

The advantage of a surface mount connector is that both sides can be used for component placement. However, the PCB designer must take precautions when placing either PTH or SMT I/O components. The same concepts apply to other components with gold fingers, ribbons, or cables attached to the board. Usually, the physical location of these devices is fixed. For example, a gold finger of a daughtercard going into a motherboard needs to be at a certain location to ensure a proper fit. At the same time, the board designer must ensure space limitations are not violated and no mechanical, vibration, or height-related issues are created due to that particular placement.

The designer also must check that all X and Y coordinate calculations are accurate, so that the cutout or gold finger ends up at the right location; if a hole is off by, say, 0.010˝ to 0.020˝, a pre-selected cable will not fit. Physical location and dimension calculations cannot be fudged if I/O components on the PCB are to mate precisely with fixed devices in other systems or subsystems.
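As a hedged illustration, the coordinate check described above can be reduced to comparing each CAD location with the mechanical drawing and flagging radial offsets beyond a tolerance. The `location_ok` helper and the 0.005˝ tolerance are hypothetical; real tolerances come from the mating hardware's drawings.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in inches


def location_ok(cad_xy: Point, drawing_xy: Point, tolerance_in: float) -> bool:
    """Return True if the CAD location lies within `tolerance_in` (radially)
    of the location required by the mechanical drawing."""
    dx = cad_xy[0] - drawing_xy[0]
    dy = cad_xy[1] - drawing_xy[1]
    return math.hypot(dx, dy) <= tolerance_in


# A mounting hole 0.015 in off in X fails a hypothetical 0.005 in tolerance.
print(location_ok((1.015, 2.000), (1.000, 2.000), tolerance_in=0.005))
```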

As for vias, there is an array of types, including blind, buried, stacked, and through-hole vias. A through-hole via goes from one side of the board to the other. Blind vias go from an outerlayer to a middle layer; for example, a blind via originates at the top layer and terminates at one of the innerlayers. Buried vias originate and terminate within innerlayers. Stacked vias originate from each of the outerlayers and terminate on the innerlayers at the same X-Y location.
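The span-based definitions above map directly onto a small classifier, the kind of rule a design-rule check might apply. This sketch assumes layers numbered 1 (top) to `layer_count` (bottom); `classify_via` and `ViaType` are illustrative names. Stacked vias are not classified here, since stacking describes aligning vias at the same X-Y location rather than a single layer span.

```python
from enum import Enum


class ViaType(Enum):
    THROUGH = "through-hole"
    BLIND = "blind"
    BURIED = "buried"


def classify_via(start_layer: int, end_layer: int, layer_count: int) -> ViaType:
    """Classify a via by the layers it connects, numbering layers from
    1 (top) to layer_count (bottom), following the definitions in the text."""
    top, bottom = sorted((start_layer, end_layer))
    if top < 1 or bottom > layer_count or top == bottom:
        raise ValueError("invalid layer span")
    if top == 1 and bottom == layer_count:
        return ViaType.THROUGH   # outer layer to outer layer
    if top == 1 or bottom == layer_count:
        return ViaType.BLIND     # one end on an outer layer
    return ViaType.BURIED        # both ends on inner layers


# An eight-layer board: top-to-layer-3 is blind, layer 3-to-5 is buried.
print(classify_via(1, 3, 8), classify_via(3, 5, 8))
```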

When it comes to selecting vias for accurate component placement, the designer must consider the pros and cons of each to avoid assembly issues later. For example, for smaller and handheld devices, which are compact in terms of available real estate, there is sometimes no choice but to use blind or buried vias. These particular vias are difficult to fabricate and increase manufacturing cost. The advantage of these vias is that routing and connections use the innerlayers, thereby saving top and bottom layers for component placement and critical routing.

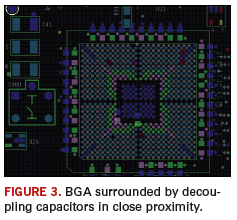

Signal integrity is another major DfA aspect when it comes to component placement. It is critical to place bypass capacitors next to BGAs, CSPs or QFNs. This is especially true in high-speed designs, in which transmit and receive paths must be highly accurate and extremely short to avoid impedance control issues. The path from a BGA ball to its bypass capacitor must be short for the capacitor to filter noise effectively; hence the importance of placing these capacitors close to BGAs, CSPs and QFNs.

If the board has too many components close to the edge and protruding from the board (Figure 1), it’s best to use tab and route rather than scoring during board fabrication. With tab and route, a small portion of rail separates adjacent boards from each other. A scoring device, on the other hand, separates boards that abut each other and is not well suited to boards with protruding devices.

Also, for efficient assembly of small boards that are panelized, create accurate fixtures for handling the PCBs during reflow soldering. Calculate the optimal panel size to maximize manufacturing efficiency, but do not make the panel too large, or it could warp under the weight of the components.
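The panel-size calculation can be sketched as a simple array count. This is a minimal example under stated assumptions: the maximum panel size is treated as an input (that is where a warpage limit would enter), the rail and board-to-board gap values are hypothetical, and boards are not rotated.

```python
def boards_per_panel(board_w: float, board_h: float,
                     panel_w: float, panel_h: float,
                     rail: float, gap: float) -> int:
    """Count boards that fit in a panel no larger than panel_w x panel_h,
    reserving `rail` on every edge and `gap` (tab/route channel) between
    boards. All dimensions in inches; board orientation is fixed."""
    usable_w = panel_w - 2 * rail
    usable_h = panel_h - 2 * rail
    if usable_w < board_w or usable_h < board_h:
        return 0
    # n boards need n*board + (n - 1)*gap <= usable, so n <= (usable + gap) / (board + gap)
    cols = int((usable_w + gap) // (board_w + gap))
    rows = int((usable_h + gap) // (board_h + gap))
    return cols * rows


# Hypothetical 2.0 x 1.5 in board, 10 x 8 in maximum panel, 0.5 in rails, 0.1 in gaps.
print(boards_per_panel(2.0, 1.5, panel_w=10.0, panel_h=8.0, rail=0.5, gap=0.1))
```

Running the count for a few candidate panel sizes, each within the warpage limit, is one way to pick the size that maximizes boards per panel.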

Aside from proper assembly techniques, DfA compliance also deals with the various tools and the points at which to deploy them during assembly. For instance, will x-ray be applied as a process check on every board, or only to a sample of one in every five, 10, or 20 boards? When will a certain fixture be used? During surface mount? Wave solder? Or maybe at AOI or testing? These issues need to be addressed at the planning stage, when the CAM engineering/planning department is reviewing the data and preparing the processes and visual aids for the jobs.

Not all machines will be able to use the same fixture, due to varying dimensions, and fixtures also differ because each is built to conform to a particular board size. Moreover, fixtures have their own mechanical and tolerance limitations. Be aware of these tolerances, as a universal fixture is not a cure-all. At times, multiple fixtures are needed: one for SMT (Figure 2), another for wave solder, and still another for testing. This most often occurs when boards are small and not uniform in shape; for example, they may be semi-circular, round, half-moon or L-shaped. In these cases, various types of fixtures are used to make assembly an easy, reliable and repeatable process.

BGAs demand special scrutiny and attention. They require just the right amount of solder paste; too much could cause shorts between the balls, leaving no choice except to depopulate and re-ball the device or use a new BGA. Moreover, it’s important to ensure correct placement, keeping in mind component orientations and polarities. When rework is involved, it is sometimes necessary to desolder nearby decoupling capacitors (Figure 3) or other devices to make rework possible.

Also, BGAs should never be placed close to the board’s edge. The reason: A BGA needs a surrounding area that is heated during rework. If the BGA is close to the edge, the half nearest the edge will not heat sufficiently, simply because there is no board mass around that side of the device to heat. Hence, the BGA will be extremely difficult to depopulate.

At the stencil design stage, sound assembly practice depends on decisions about foil thickness, stencil frames, aperture-to-pad ratio (for dispensing the correct amount of solder paste), when to use window panes, first article inspection, and the right type of solder paste, along with the use of paste height measurement systems.

Foil thickness determines the amount of paste dispensed. Assembly personnel must decide whether a stencil will be 0.004˝, 0.006˝ or 0.008˝ thick, based on the amount of paste required. Changing the foil thickness changes the amount of paste dispensed on the pads, which in turn affects the thermal profile.
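A worked sketch of how foil thickness drives paste volume, assuming an idealized deposit (aperture area times foil thickness, 100% transfer) and a hypothetical 20 x 10 mil aperture cut in 4, 6 and 8 mil foils. It also computes the area ratio (aperture opening area divided by aperture wall area), which IPC-7525 stencil design guidance commonly recommends keeping above about 0.66 for reliable paste release. The function names are illustrative.

```python
def paste_volume_mil3(aperture_l_mil: float, aperture_w_mil: float, foil_t_mil: float) -> float:
    """Idealized deposit volume in cubic mils: aperture area x foil thickness,
    assuming 100% transfer efficiency."""
    return aperture_l_mil * aperture_w_mil * foil_t_mil


def area_ratio(aperture_l_mil: float, aperture_w_mil: float, foil_t_mil: float) -> float:
    """Aperture opening area divided by aperture wall area."""
    opening = aperture_l_mil * aperture_w_mil
    walls = 2 * (aperture_l_mil + aperture_w_mil) * foil_t_mil
    return opening / walls


# Hypothetical 20 x 10 mil aperture in 4, 6 and 8 mil foils.
for foil in (4, 6, 8):
    ratio = area_ratio(20, 10, foil)
    verdict = "OK" if ratio > 0.66 else "marginal release"
    print(f"{foil} mil foil: {paste_volume_mil3(20, 10, foil):.0f} mil^3, "
          f"area ratio {ratio:.2f} ({verdict})")
```

The trade-off is visible in the loop: a thicker foil deposits more paste, but for a small aperture it also drives the area ratio down, so paste tends to stay in the aperture instead of transferring to the pad.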

Foil should come framed from the vendor. Unframed stencil foils create time and reliability issues. Time-consuming mistakes may include mounting the foil upside down or in reverse, and damaging it. Also, if a stencil foil is not tensioned properly when it is mounted on the adapter, the quality of the paste deposit can suffer, causing bridging and misregistration on the SMT pads.

For example, the aperture-to-pad ratio might be set at 1:1, 1.1:1 or 0.9:1. A 1:1 ratio means the stencil opening is the same size as the SMT pad. A 0.9:1 ratio means the stencil opening is smaller than the pad, so less paste is dispensed; this is mostly used in ultra-fine-pitch SMT applications.

Sometimes a stencil opening is made a bit larger than the pad, so that the aperture-to-pad ratio becomes 1.1:1. This permits slightly more paste to be dispensed on the pad, and is mostly used in heavy analog applications. In each case, assembly personnel must be fully aware of a board’s end application and whether the dispensed solder paste is adequate. Some studies have found that as much as 75% of defects are related to paste dispensing, which bears directly on stencil design.
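To make the aperture-to-pad ratios concrete, the sketch below applies the three ratios discussed in the text to a hypothetical 60 x 30 mil pad. It assumes the ratio scales each pad dimension linearly; under that assumption the printed area changes with the square of the ratio (0.9:1 gives 81% of the pad area, 1.1:1 gives 121%). Some shops specify the ratio by area instead, so check which convention a stencil vendor uses.

```python
def aperture_for_pad(pad_l: float, pad_w: float, ratio: float):
    """Return (length, width, area fraction) of a stencil aperture, assuming
    the aperture-to-pad ratio scales each pad dimension linearly."""
    ap_l = pad_l * ratio
    ap_w = pad_w * ratio
    return ap_l, ap_w, (ap_l * ap_w) / (pad_l * pad_w)


# Hypothetical 60 x 30 mil pad at 0.9:1, 1:1 and 1.1:1.
for r in (0.9, 1.0, 1.1):
    l, w, frac = aperture_for_pad(60, 30, r)
    print(f"{r}:1 -> {l:.1f} x {w:.1f} mil aperture, {frac:.0%} of pad area")
```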

Window panes are used when a stencil design has a very large opening. Consider a 0.5˝ x 0.25˝ opening, which is extremely large when it comes to dispensing paste. The window is therefore divided into panes, creating smaller openings within the large one. For example, six separate slots or panes may be created to control the amount of paste going to each pane, rather than depositing one large blob of paste on the pad.
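The window-pane arithmetic for the 0.5˝ x 0.25˝ example can be sketched as follows. The 3 x 2 grid (six panes) matches the example count, but the 0.020˝ web width between panes is a hypothetical value; the function reports each pane's size and the fraction of the original opening that still prints paste.

```python
def pane_grid(open_l: float, open_w: float, cols: int, rows: int, web: float):
    """Split a large aperture into cols x rows panes separated by stencil webs
    of width `web` (all inches). Returns pane length, pane width, and the
    fraction of the original opening area that still prints paste."""
    pane_l = (open_l - (cols - 1) * web) / cols
    pane_w = (open_w - (rows - 1) * web) / rows
    if pane_l <= 0 or pane_w <= 0:
        raise ValueError("webs consume the whole opening")
    coverage = (cols * rows * pane_l * pane_w) / (open_l * open_w)
    return pane_l, pane_w, coverage


# 0.5 x 0.25 in opening split into six panes with hypothetical 0.020 in webs.
pane_l, pane_w, coverage = pane_grid(0.5, 0.25, cols=3, rows=2, web=0.020)
print(f"pane {pane_l:.4f} x {pane_w:.4f} in, {coverage:.1%} of the opening printed")
```

Widening the webs or adding panes lowers the coverage, which is the lever for reducing paste volume on a large pad.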

Also, every time a stencil design decision is made, it’s vital to perform a first-article check to confirm that the proper amount of paste is dispensed, or to determine whether another foil needs to be cut due to a thickness or aperture-to-pad ratio change. This acts as a process verification tool for dispensing the right amount of paste on the pads.

Here’s where a first-article inspection (FAI) system proves invaluable. FAI systems are relatively new. They create the first-article reference by scanning an image of the whole board (the golden board), then compare the images of all other boards with this golden board to ensure all components are placed properly, with correct orientation and polarity. Used as a process verification and inspection tool, FAI significantly reduces human involvement and makes inspection and QC more reliable, more repeatable, and at least 30 to 50% faster.
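Commercial FAI systems compare scanned images; the sketch below is only a simplified, data-level analogue of the golden-board idea, assuming each board is summarized as a hypothetical map of reference designator to (x, y, rotation). The `compare_to_golden` name and the 0.005˝ position tolerance are illustrative.

```python
from typing import Dict, List, Tuple

Placement = Tuple[float, float, int]  # x (in), y (in), rotation (degrees)


def compare_to_golden(golden: Dict[str, Placement],
                      board: Dict[str, Placement],
                      xy_tol: float = 0.005) -> List[str]:
    """List reference designators that are missing, offset beyond xy_tol,
    or rotated differently from the golden board."""
    issues = []
    for ref, (gx, gy, grot) in golden.items():
        if ref not in board:
            issues.append(f"{ref}: missing")
            continue
        x, y, rot = board[ref]
        if abs(x - gx) > xy_tol or abs(y - gy) > xy_tol:
            issues.append(f"{ref}: offset")
        if rot % 360 != grot % 360:
            issues.append(f"{ref}: orientation/polarity")
    return issues


golden = {"U1": (1.000, 1.000, 0), "C1": (2.000, 1.000, 90)}
board = {"U1": (1.000, 1.000, 180), "C1": (2.001, 1.000, 90)}
print(compare_to_golden(golden, board))
```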

Last, solder paste type is a vital consideration for accurate component placement. Normally, Type 2 and 3 solder pastes are used for analog and mixed analog/digital boards. Sometimes Type 4 (or finer) is required for fine-pitch BGAs, CSPs and QFNs.

Generally speaking, solder paste is a mix of metal powder and flux chemistry in a form suitable for printing and soldering. The metal particles are considerably finer in Type 4 than in Type 3 (under IPC J-STD-005, roughly 20-38 µm versus 25-45 µm). Because the particles are smaller, more of them fit across a small stencil aperture, so Type 4 prints more accurately and consistently on fine-pitch components than Types 2 and 3.
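A common way to connect particle size to aperture size is the "five-ball" rule of thumb: at least five of the largest particles should fit across the narrowest aperture dimension. The sketch below applies that rule to the J-STD-005 size ranges for Types 2 through 4; the function names and sample aperture widths are illustrative, and the threshold of five is a guideline, not a hard limit.

```python
# IPC J-STD-005 particle size ranges (micrometers) for paste Types 2-4.
PASTE_TYPES = {2: (45, 75), 3: (25, 45), 4: (20, 38)}


def particles_across(aperture_width_um: float, paste_type: int) -> float:
    """How many of the largest particles of a paste type span the aperture width."""
    _, largest_um = PASTE_TYPES[paste_type]
    return aperture_width_um / largest_um


def coarsest_type_for(aperture_width_um: float, min_particles: float = 5.0):
    """Coarsest paste type (2, 3 or 4) meeting the five-ball rule of thumb,
    or None if even Type 4 is too coarse for the aperture."""
    for paste_type in sorted(PASTE_TYPES):
        if particles_across(aperture_width_um, paste_type) >= min_particles:
            return paste_type
    return None


for width in (400, 250, 200, 150):
    print(f"{width} um aperture -> Type {coarsest_type_for(width)}")
```

Returning `None` for the narrowest aperture signals that a paste finer than Type 4 (or a larger aperture) would be needed.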

Zulki Khan is president and founder of Nexlogic Technologies (nexlogic.com); zk@nexlogic.com.

Frederick Grover’s invaluable tome is essential for partial inductance calculations.

Inductance is probably the most confusing topic in signal integrity, yet one of the most important. It plays a significant role in reflection noise, ground bounce, PDN noise and EMI. Fortunately, the definitive book on inductance, originally published in 1946 by Frederick Grover, is available again.

After two printings in the 1940s, Grover’s Inductance Calculations was out of print by the mid 1980s. It was just reprinted this year and is available from Amazon in paperback (amazon.com/Inductance-Calculations-Dover-Books-Engineering/dp/0486474402/ref=sr_1_1?ie=UTF8&s=books&qid=1260191720&sr=8-1) for a very low price.

Motors, generators and RF components experienced a period of high growth in the 1940s. At their core were inductors, and being able to calculate their self- and mutual-inductance using pencil and paper was critical. (Keep in mind this predated the use of electronic calculators.) While many coil geometries had empirical formulas specific to their special conditions, Grover took on the task of developing a framework of calculations that could be applied to all general shapes and sizes of coils.

While Grover does not explicitly use the term, what he calculates in his book are really partial inductances, rather than loop inductances. A raging debate in the industry today is about the value of this concept. Proponents say it dramatically simplifies solving real-world problems and is perfectly valid as a mathematical construct. You just have to be careful translating partial inductances into loop inductances when applying the concept to calculate induced voltages. Opponents say there is no such thing as partial inductance; it’s all about loop inductance, and if you can’t measure it, you should not use the concept. There is too much danger of misapplying the term.

I personally am a big fan of partial inductance and use it extensively in my book, Signal and Power Integrity – Simplified. It eases understanding of the concepts of inductance, and highlights the three physical design choices that reduce the loop inductance of a signal-return path: wider conductors, shorter conductors, and bringing the signal and return conductors closer together. Most important, partial inductance is a powerful concept to aid in calculating the inductance of arbitrarily shaped conductors.

Inductance is fundamentally the number of rings of magnetic field lines around a conductor, per amp of current through it. In this respect, it is a measure of the efficiency with which a conductor generates rings of magnetic field lines. To calculate the inductance of a conductor, count the number of rings of field lines and divide by the current through the conductor. Counting all the rings surrounding a conductor really amounts to integrating the magnetic field density on one side of the conductor.
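In standard equation form (notation added here, not taken from the column), "rings of field lines per amp" is the magnetic flux Φ divided by the current I, and the flux is the integral of the magnetic flux density B over a surface S. For a partial inductance, S is usually taken to run from the conductor outward to infinity on one side:

$$L = \frac{\Phi}{I}, \qquad \Phi = \int_S \mathbf{B} \cdot d\mathbf{A}$$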

Literally everything about the electrical effects of interconnects stems from Maxwell’s equations. Grover starts from the basic Biot-Savart Law, which follows from Ampere’s Law and Gauss’s Law for magnetism (each one of Maxwell’s equations), and derives all his approximations from it. The Biot-Savart Law describes the magnetic field at a point in space from a tiny current element.
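For reference, the standard differential form of the Biot-Savart Law gives the field dB at a point a distance r from a current element I dl, where r̂ is the unit vector from the element to the point and μ0 is the permeability of free space:

$$d\mathbf{B} = \frac{\mu_0}{4\pi}\,\frac{I\,d\mathbf{l} \times \hat{\mathbf{r}}}{r^2}$$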

Using this approach, Grover is able to calculate the magnetic field distribution around a wide variety of conductor geometries and integrate the field (count the field lines) to get the total number of rings per amp of current. Using clever techniques of calculus, he is able to derive analytical approximations for many of these geometries.

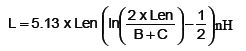

The most commonly used approximation is for the partial self-inductance of a long, straight rectangular conductor, such as a lead frame in a QFN package or a connector pin. He calculates it as:

$$L = 5\,\mathrm{Len}\left[\ln\left(\frac{2\,\mathrm{Len}}{B+C}\right) + 0.5 + 0.2235\,\frac{B+C}{\mathrm{Len}}\right]$$

where

L = the partial self-inductance in nH

B, C = the thickness and width of the conductor cross section in inches

Len = the length of the conductor in inches.

For example, for a 1˝ long lead, 0.003˝ thick and 0.010˝ wide, the partial self-inductance is about 28 nH. This is roughly 25 nH per inch, or 1 nH/mm, which is a common rule of thumb for the partial self-inductance of a wire.
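The sketch below evaluates Grover's rectangular-bar approximation in the form commonly quoted for inch units, L = 5·Len·[ln(2Len/(B+C)) + 0.5 + 0.2235(B+C)/Len] nH, where the prefactor 5 nH/inch is μ0/2π (about 5.08 nH/inch) rounded. The function name is illustrative, and the approximation assumes the length is much greater than the cross-section dimensions.

```python
import math


def partial_self_inductance_nh(length_in: float, thickness_in: float, width_in: float) -> float:
    """Grover's approximation for the partial self-inductance (nH) of a straight
    rectangular bar, all dimensions in inches. Assumes length >> thickness + width."""
    b_plus_c = thickness_in + width_in
    return 5.0 * length_in * (
        math.log(2.0 * length_in / b_plus_c) + 0.5 + 0.2235 * b_plus_c / length_in
    )


for length in (0.25, 0.5, 1.0):
    print(f"{length} in lead: {partial_self_inductance_nh(length, 0.003, 0.010):.1f} nH")
```

Because of the logarithm, doubling the length slightly more than doubles the partial self-inductance, which is why a per-inch figure is only a rule of thumb.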

If you deal with connectors, packages, vias, board discontinuities or odd-shaped transmission lines, and need to estimate the loop inductances of non-uniform sections, Grover’s book is a great resource. You will have a great collection of inductance approximations at your fingertips. It is well worth the low price.

Dr. Eric Bogatin is a signal integrity evangelist with Bogatin Enterprises, and has authored six books on signal integrity and interconnect design, including Signal and Power Integrity – Simplified, published in 2009.

Time and technology pass, but the three drivers of change remain static.

It really wasn’t until a couple of weeks ago that it hit me: We are no longer in the first decade of the “new millennium.” Remember Y2K? Well, that was 10 years ago – eons in the world of electronic technology.

Scarier still is looking back at what the pundits were predicting on the eve of Y2K. Some saw gloom and doom for the modern world because of old analog clocks, unable to handle moving from “19” to “20,” therefore shutting down our modern utility-driven world. Others saw the fortunes of Wall Street moving ever onward and upward with another 250% gain for the decade. Some in our industry saw the size, profitability and clout of the North American printed circuit board industry leading the world, while other global areas continued to try to catch up. Let’s see: wrong, very wrong and sorta wrong. So much for pundits.

As much as some things have changed, the drivers of that change remain amazingly consistent with the drivers of all changes since anyone can remember or history has documented. No matter how tantalizing or technologically sophisticated the future appears to be, basics still govern. Over the years, when I have asked the “older and wiser” among us – those who have helped us get to where we are today – the three drivers cited have been the same as those mentioned by young up-and-coming technologists, those leading us into the future. As I try to adjust to the second decade of the new millennium, I find myself considering how ageless and powerful these can be.

Curiosity is the driver that makes us ask questions. Without asking questions, such as how does this work, or what can I do to improve something, momentum never changes. In our industry, designers are perhaps the best example of those who always ask questions and consider the corresponding thoughts of “what if?” But curiosity is not limited to those who design product. Many a sale has been made by those curious about what other companies might need. Equally, in manufacturing no improvement can take place without someone asking how a process can be improved.

Ideas are the answers we devise to the questions that curiosity raises. Initiating change comes not from accepting the status quo, but from developing ideas about what to do next. Again, designers do this all the time and seemingly effortlessly with tremendous results. Salespeople also come up with ideas that transform an unapproachable prospect into a loyal customer. Ideas transform the status quo into the “what if?”

Effort is the catalyst that enables curiosity to lead to an idea and that idea to be transformed into a result. Effort requires far less imagination or intelligence, but without it, nothing will result. Effort is the sweat it takes to work through the details and turn an idea into a satisfactory solution to the question initiated by curiosity.

Which brings me back to the pundits and their predictions. Despite those pesky analog clocks, the world did not end, because someone became curious and determined there was a problem. Then someone came up with ideas that might resolve the problem. We all took part in the effort to replace the disruptive clocks and controllers with digital versions, thus averting the Y2K gloom and doom.

Wall Street, on the other hand, was far less curious, had no idea, and therefore made no effort. By assuming something might happen and being comfortable with the status quo, it saw this past decade lost on just about every measure of asset growth and wealth generation. Most investors have at best been treading water, in large part because of complacency and an aversion to taking advantage of the drivers that cause change.

Closer to home, our industry has experienced much change – some good, some not. Technology has marched forward because people remain curious, come up with great ideas and make the effort to convert ideas to cutting-edge product. Make no mistake, it is not software that enables technological advances, but rather the people who continually ask questions and challenge the norms – and then do something about it.

But for some, it has been a different story. Too many became complacent at the end of the 1990s. The curiosity to imagine what might be, and consider how best to make it happen, seemed to fade into the status quo. For others, many in far-flung parts of the world, curiosity was rampant. Ideas flowed like water. The effort was made to harness those ideas to satisfy the curiosity, and in 10 short years the epicenter of much of the technological world has shifted to places some thought unimaginable.

One of the many lessons of the past decade we all can apply to this new one is that if you become too complacent, the world will pass you by and in the worst way. Equally, if you stick to your knitting and are proactively curious, creatively developing new ideas and making the effort to take full advantage of that curiosity and thought, life can be good. The trick is making sure that everyone – from the top of the organization through all lower levels – doesn’t become complacent, but instead keeps thinking “what if?” That’s what good leadership inspires.

We in the technology world need to remember that every day offers us (and our competition) time for dynamic change to take place. Those who are getting ahead may be doing so by simply being curious, encouraging ideas and then making the effort – as daunting as it may seem at times. The challenge – and opportunity – is to remember to be curious, to have ideas, and to make the effort.

Peter Bigelow is president and CEO of IMI (imipcb.com); pbigelow@imipcb.com. His column appears monthly.

One year into the job, Assembléon CEO André Papoular is still up for the challenge.

In January 2009, after nearly 29 years with Philips, André Papoular was named chief executive of placement OEM Assembléon. With engineering degrees in electromechanics, power electronics and automation, and experience in marketing, divestitures, and business management across two continents and three business units (electronics automation, consumer electronics, semiconductors), he has arguably been preparing for this role his entire career. At Productronica in November, fresh off an announcement that Assembléon would team with Valor to install Valor’s performance monitoring and assembly traceability software on its placement machines, Papoular and senior director of marketing Jeroen de Groot spoke with Circuits Assembly.

CA: If you knew what the business environment was going to be like in 2009, would you have taken the job?

AP: [laughs] I spent 29 years at Philips, in marketing, divestitures, and finally, business management. I’ve worked on the electronics side, consumer electronics, semiconductors, in Asia and Europe. I think I knew what the job was, and that’s why I took it. I looked at the plan the first day and challenged it, because I knew it would not be [the way it turned out]. It’s been very enjoyable; the team has been supportive through the crisis.

CA: How did the deal with Valor come about?

AP: When the downturn hit, we did not blame the past. We faced the reality of today: What are the signals we could see and opportunities we could grab? There are not too many opportunities for vertical integration anymore. It is much more efficient to look for partnerships.

CA: How complex is it to be an OEM and a major distributor?

AP: The [customer’s] choice is not made by brand. We look at the width of our portfolio and how to select the best set for our customers.

CA: Has the economy affected the transactional nature of your business?

AP: For payment terms, because of some uncertainties, some companies needed to negotiate with us first. We had to revise means of payment to ensure [we would be paid]. We were scrutinized by suppliers as well. We will end 2009 with just a small amount of bad debt. We paid attention and had lots of discussions about payment terms.

CA: What effect, if any, did [CyberOptics’ chairman] Steve Case’s death have on your partnership?

AP: It was a sad event. He had a personal relationship with many people at Assembléon for many years. CyberOptics is a proficient company and has done its best to ensure continuity. Our compliments to [chairman and CEO] Kitty [Iverson] and her team.

CA: Has SMEMA kept up with industry needs?

JdG: For cph [component placements per hour], IPC-9850 still helps. Once people start talking about accuracy, it helps. In most cases, it remains a good starting reference. It would be relevant to establish a standard for changeover; for example, does it start with the first board through or the last board through?

CA: Will we see full-fledged inspection on the placement machine?

JdG: We have to be clear on what’s being measured. We focus on reducing errors at the source, where they occur, so we try to make sure our machines make the fewest number of mistakes. Just adding a camera won’t solve that. For the printer, we did a study and found the prime cause of problems was particles of paper from the solder getting in and then burning during reflow.

AP: We go back to the accuracy of the machine. If the machine is doing what it is supposed to, it won’t justify putting AOI after placement.

Offshoring works best for stable designs and predictable demand.

“We need to try to bring the price down some more,” the customer told me over the phone.

It was 2005, and the company I worked for was a custom OEM primarily serving the telecommunications industry. After the telecom collapse, everyone wanted the lowest cost possible, and no one was able to forecast sales beyond three months. Long-term purchase orders that helped with downstream planning were a thing of the past. We had already squeezed the supply chain as much as we could; without longer-term planning, I was unable to get additional volume discounts on material. The customer was a startup, though, and was attempting to hit a price point that it was sure would sell decent volume. We were looking at around 2,000 pieces per year, which for us was high volume.

After management review, we decided to try outsourcing the board to cut costs. I had some experience with Chinese manufacturing, and our company was in the process of setting up an office in India with an eye toward offshoring certain engineering operations and assembly. The thinking was that by having our own office in a low-cost country, we would be able to better manage the offshore suppliers. I sent RFQs to China and to our Indian office. Even at the lower volume, I was able to find cost savings: 35% in China and 25% in India. For various reasons, the Indian supplier was selected, and the customer was happy with the cost savings. Now it fell to me to manage the supplier.

I had heard the horror stories associated with offshore sourcing, mostly related to product quality. One of my customers had set up a closed-circuit camera in the facility of one of its offshore partners. When boards were completed, the manufacturer (in India) placed the finished product on a table under the camera, and the US customer logged in and inspected the boards remotely before permitting shipment. That arrangement seemed overly involved to me, and I was determined to avoid it.

Worldwide shipping services had largely eliminated the cost concerns I had heard about in early offshoring discussions. I no longer needed to wait for a container to fill to get product shipped at an acceptable cost. Sure, it was more expensive than having my local supplier deliver the boards, but that cost adder had been factored into my sell price to my customer.

I had also been warned about language barriers and time-zone delays. I found the majority of my contacts in India spoke English nearly as well as I did – accented English, to be sure, but still very understandable. The Indian companies we did business with had also moved their mid- and upper-level management personnel to second shift, which allowed them to work during our normal first-shift hours in the Eastern time zone. We also had local representatives who could be sent to the manufacturing facility to oversee our needs.

Initially, the relationship worked rather well, despite my reservations and fears. As time elapsed, however, we began to run into issues, primarily with on-time delivery. The supplier was unable to adjust efficiently to our needs, whether because of in-country material availability or assembly capacity. We needed releases of 200 pieces, but they would run 500 at a time. Units would fail test, and instead of asking for help troubleshooting, they would push them aside in favor of other customers’ demands. We’d make engineering changes, and they would neglect to update their assembly documentation, or make us take previous-revision boards left over from their overruns. We had never discussed minimum lot quantities, nor were they a condition of the contract. The supplier also purchased material in much higher quantities than needed for the orders we had placed, and then asked us to cover the material overage costs, another item that was not a condition of its quote, the contract or our purchase order.

Most issues we encountered were similar to those we had seen with local suppliers, but the distance aggravated them. With the time zones involved, I was unable to manage the issues effectively while maintaining adequate lead times. The stateside overhead required to manage the product had not been factored into our landed-cost analysis, and it quickly absorbed any cost savings. In the end, we were forced to bring the product back onshore so that it could be efficiently produced and managed.
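The landed-cost point can be made concrete with a short calculation. The sketch below is illustrative only: the function name and the figures (a $100 onshore unit price, $3 per unit freight, zero duty and $50,000 per year of stateside management overhead) are hypothetical assumptions; only the 25% savings and the roughly 2,000 pieces per year come from the story above. It spreads annual management overhead across annual volume and adds it to the per-unit price.

```python
def landed_unit_cost(unit_price, freight_per_unit, duty_rate,
                     annual_overhead, annual_volume):
    """Hypothetical per-unit landed cost: price + duty + freight, plus
    stateside management overhead spread across the year's volume."""
    if annual_volume <= 0:
        raise ValueError("annual_volume must be positive")
    duty = unit_price * duty_rate                      # duty as a fraction of price
    overhead_per_unit = annual_overhead / annual_volume
    return unit_price + duty + freight_per_unit + overhead_per_unit


# Assumed onshore supplier: no freight adder, no extra management overhead.
onshore = landed_unit_cost(100.0, 0.0, 0.0, 0.0, 2000)
# Assumed offshore supplier: 25% lower quoted price, but freight and
# $50,000/year of stateside management spread over 2,000 pieces.
offshore = landed_unit_cost(75.0, 3.0, 0.0, 50_000.0, 2000)
savings_erased = offshore > onshore
```

With these assumed inputs, the offshore board lands at $103 per unit against $100 onshore: $25 per unit of management overhead more than cancels the $25 price advantage once freight is added. Lower overhead or higher volume shifts the balance, which is why the overhead term belongs in the analysis from the start.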

As with any outsourcing effort, planning is essential to successful offshoring. If the design is not stable, or demand is not relatively predictable, the odds of success decrease. Stateside management costs must be factored into the landed costs, all the more so for products with volatile demand. I believe offshoring can be an effective solution for the right product and volume mix, but it is not a viable option when demand is variable and the design is not stable. Stick with a flexible onshore supplier for these sorts of products.

Rob Duval is general manager of SPIN PCB (spinpcb.com); rob@spinpcb.com.