Macroeconomic cycles are real, but are they reliable indicators of EMS growth?

Perhaps the industry’s most frustrating aspect is its cyclical nature. Equipment manufacturers and OEMs cannot escape the desperate ups and downs. While there are natural cycles of consumption and excess, they usually are the result of consumer spending variations that thread through the supply chain and create havoc with the supplier base.

Worst hit are equipment suppliers, whipped by the combination of end-user demand and OEM whims concerning predicted fulfillment. The chain reaction of supply makes this boom/bust scenario chaotic and excessive in terms of forecasting revenue. It is often an accumulative effect in which the tail of the chain – the equipment/manufacturing guys – gets whacked most, while distributors and EMS suppliers are left with excess or deficient inventory.

This is all specific to product industries – PCs, notebooks, LCD monitors/TVs, set-top boxes and video console games, to mention a few. To be sure, OEMs are their own worst enemies – they hedge against upside demand and over-predict against downside supply – to their, and their suppliers’, misfortune. Yet, who cannot claim to be accurate and wise after the fact? As a forecaster, I can honestly say that when we are spot-on, it is sheer luck, and when we are wildly off, we hide until the storm passes.

One hopes time will provide wisdom and sanity. We try to integrate knowledge gained from watching such cycles in our current forecasts. Conservatism seems to pay dividends, yet we have often been wrong. Sometimes we have been astoundingly accurate – shockingly – yet we know that we must attribute it to luck.

A few examples make this point. In 2000, when the market was booming, we precisely predicted EMS market growth, but this was because it was exploding and there seemed no end in sight. When the downturn arrived, it was very embarrassing to revise our forecasts. Similarly, we expected a solid 2009. We lick our wounds and beg for forgiveness and forgetfulness.

Yet, who could know? Cycles exist that seem beyond our awareness. The Foundation for the Study of Cycles (foundationforthestudyofcycles.org) is well aware of this, if you believe in such things as planetary or cosmic/organic cycle studies. It is a fascinating organization, one that examines not just economic cycles, but other systemic movements in nature and life that seem to follow patterns outside our predictive natures. I can’t say that I agree with all its predictions, but I am interested insofar as they help us understand our businesses.

Edward R. Dewey, a Harvard economist and founder of the FSC, made this bold statement: “Cycles are meaningful, and all science that has been developed in the absence of cycle knowledge is inadequate and partial. [A]ny theory of economics, sociology, history, medicine, or climatology that ignores non-chance rhythms is as manifestly incomplete as medicine was before the discovery of germs.”

Prizes have been awarded for cycle research on topics such as how solar and lunar cycles and El Niño correspond to droughts in Northeastern Brazil, Morocco and the American Southwest; cycles in specific markets and economies; and forecasting turning points in international business cycles. Some researchers even go so far as to predict systematic cycles of war, tree ring widths, weather, biophysical science, civil violence, insurance, motivation and, yes, corporate lifecycles! It all can become a little esoteric when it comes down to biocybernetics and new option markets, but so it goes.

Back on earth, cycles both exist and (seem to) recur. Electronics seems to be an industry of boom and bust, much to its participants’ consternation. Yet, growth endures and every year we look forward to new gains. In this regard, I can positively predict the EMS and electronics assembly industries and their corollaries will continue to grow, albeit at varying rates. The recession cycles are the most disturbing and unpredictable.

I am not advocating cyclic studies as the way to understand the economics of electronics manufacturing; rather, they should be considered in the larger context.

Macroeconomic cycles are real, despite our subtle denial. I wish we were able to harness them better in our work. In the meantime, we will try our best to work with our best knowledge, intellect and intuition to help our clients predict future product demand. Otherwise, suppliers must rely on their own intelligence, and where does that come from? Is it best guess, economic indicators, prognosticators, cyclic studies? We would be silly to promote such a view as cyclic studies; however, it introduces a fascinating dimension to a world that has not been completely proven.

Randall Sherman is president and CEO of New Venture Research Corp. (newventureresearch.com); rsherman@newventureresearch.com. His column runs bimonthly.

We left off last month pontificating on the so-called “conflict metals” – ores mined in the Democratic Republic of Congo, often by child laborers under duress and whose efforts inadvertently underwrite the now decade-long war that has led to the slaughter of millions and devastated that African nation.

Various world bodies, including the US government, are considering legislation to force buyers away from these contested metals. Sounds good on paper, but the issue remains that refined metals are indistinguishable, with no identifying thumbprint. Solder suppliers purchase the metals on exchanges, far too late for any traceability.

Several stakeholders have latched onto this problem – which is good. There are potential rubs, but the recent discussions suggest progress in the right direction.

To bring readers up to date, on Dec. 9, 13 representatives from various industry trade groups and electronics companies met in Paris to discuss potential outcomes. Among those attending the meeting were representatives of Intel and Motorola. As if to underscore the weight of the matter, per the meeting minutes, the two companies agreed conflict mining is unacceptable, with Intel saying a solution would be necessary within six months.

Via a joint workgroup of the EICC (Electronics Industry Citizenship Coalition) and GeSI (Global e-Sustainability Initiative) – the former a group of large electronics companies, the latter a nonprofit association – Intel, Motorola and their corporate colleagues are collaborating with experts, governmental and non-government bodies, academia, and other supply-chain organizations to “learn more about mineral mining and processing.”

Last seen in these parts dissuading lead use, the International Tin Research Institute last July hatched a three-phase plan (the Tin Supply Chain Initiative, or iTSCi) to ensure due diligence through written documentation by traders and comptoirs. Such evidence would prove legal status, legitimacy, export authority and certificate of origin. ITRI, whose members are the world’s big tin miners and smelters, requires the documentation on every shipment and claims “most players now recognize the need for improvement.”

More critical is Phase 2 of the iTSCi: traceability to the mine. The plan calls for achieving this through a system of unique reference numbers assigned to each parcel of material shipped from the mine site. Information on the reference number would be recorded on paper as minerals are moved. The final document, which records for audit purposes the unique reference numbers issued at the preceding point in the supply chain, is a Comptoirs Provenance Certificate, and would become a precondition for any export of minerals to ITRI member smelters.

The process, ITRI says, should ensure any export shipment leaving DRC could be tracked back, via the tag number, to the mine/area where it was produced, and provide confirmation that the mine or source area, transport routes, and all the middlemen in the chain are “clean.” (For more information on the first two phases, see itri.co.uk/pooled/articles/bf_partart/view.asp?q=bf_partart_310250.)
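To make the tracking idea concrete, here is a minimal sketch of how a chain of reference numbers could be walked back from an export shipment to its source. It is only an illustration of the principle described above, not ITRI's actual system: the `Record` structure, the `trace_to_mines` function, the reference-number formats and the holder names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    """One paper record in the chain (hypothetical structure)."""
    ref: str                      # unique reference number issued at this point
    holder: str                   # who issued it: mine, trader, comptoir...
    previous_refs: list = field(default_factory=list)  # refs from the preceding point


def trace_to_mines(ref, ledger):
    """Walk back through the preceding reference numbers to the origin(s).

    Raises KeyError if any link in the chain is undocumented.
    """
    record = ledger[ref]
    if not record.previous_refs:          # nothing upstream: this is a mine site
        return {record.holder}
    origins = set()
    for prev in record.previous_refs:
        origins |= trace_to_mines(prev, ledger)
    return origins


# Hypothetical ledger: two mine parcels combined by a trader, then exported.
ledger = {
    "M-001": Record("M-001", "Mine A"),
    "M-002": Record("M-002", "Mine B"),
    "T-010": Record("T-010", "Trader X", ["M-001", "M-002"]),
    "C-100": Record("C-100", "Comptoir Y", ["T-010"]),
}

print(trace_to_mines("C-100", ledger))
```

A shipment whose chain contains an unrecorded reference number raises an error rather than returning a partial answer, which mirrors the idea that documentation is a precondition for export.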

When millions are dying, all solutions must be considered. I like that the industry is taking charge here, as opposed to leaving its fate to governments that might be prone to legislating a “solution” without necessarily understanding (or caring about) the technical and logistical hurdles. I have been a skeptic of the validity of audits, and remain somewhat unconvinced the methodology put forth will be sufficient and uncorrupted. But it’s coming together much better – and faster – than I could have hoped, and all those involved deserve considerable credit.

Virtual world. Early next month, the 3d Annual Virtual PCB trade show takes place. As we went to press, two major CAD software companies, EMA and Altium, had just signed on, as had placement and screen printer equipment OEM Assembléon. Moreover, UP Media signed a deal under which SMTA has been made an exclusive partner, and will support the show with brand new technical Webinars and other programming. We are thrilled with the turnout of the past two shows – a total of 4,800 registrants – and excited at the prospects going forward. Register now at virtual-pcb.com. It’s free, and you never have to leave your desk.

Tweet, tweet. And for the latest news alerts, don’t forget to follow us on Facebook and Twitter (http://www.twitter.com/mikebuetow).

Environmental Compliance

“RoHS vs. REACH: What Does This Mean to IT Hardware Companies and Data Management?”

Authors: Jackie Adams, et al; jackiea@us.ibm.com.

Abstract: When preparing to meet EU REACH requirements for IT electronics hardware, the focus is on tracking, communication and notification of certain substances in articles, while RoHS focuses on eliminating or restricting the use of heavy metals and other substances in homogeneous materials. Because of the larger number of potential chemicals involved (Substances of Very High Concern, SVHC, candidates), and the fact that there are no exemptions applicable to hardware products, the REACH requirements can be a challenging task for companies. With EU REACH, if the stated concentration level of SVHC candidates in articles (e.g., hardware products) is exceeded, producers and importers in the EU are subject to communication and possibly notification requirements. This drives extensive information requests throughout the supply chain. These regulations drive requirements for quantitative and qualitative chemical data and an increased need for the surveillance of emerging regulations as they are being formulated, so that one can design compliance processes that are ahead of what will become firm requirements. This paper outlines data collection options the electronics industry can deploy in connection with their supply chain and with clients to develop material composition information systems necessary to comply with both RoHS and REACH requirements. (SMTAI, October 2009)

Failure Modes

“Head-and-Pillow SMT Failure Modes”

Authors: Dudi Amir, Raiyo Aspandiar, Scott Buttars, Wei Wei Chin and Paramjeet Gill; dudi.amir@intel.com.

Abstract: Head-and-pillow is an SMT non-wetting defect. This defect is hard to detect after SMT assembly and most likely will fail in the field. There are a number of causes for this type of defect. They can be categorized into process issues, material issues, and design-related issues. This paper examines different causes for the head-and-pillow defect and the mechanism behind it. The paper also describes critical factors that affect head-and-pillow, how to identify the root cause, and potential solutions for prevention. (SMTAI, October 2009)

Laminates

“Conductive Anodic Filament Study: Laminates and Pb-Free Processing”

Author: Randal L. Ternes; randal.l.ternes@boeing.com.

Abstract: This work evaluated the conductive anodic filament (CAF) growth susceptibility of three common PWB laminate systems under eutectic SnPb reflow and Pb-free reflow conditions. CAF test boards fabricated from three resin types were reflowed five times, following either eutectic SnPb or Pb-free profiles. The boards were subjected to a 600 hr., 100VDC CAF test. CAF failures were detected in all laminates and in both reflow profiles. Pb-free processing caused twice as many CAF failures as eutectic SnPb processing. Pb-free epoxy laminate was substantially more CAF-resistant than either high Tg epoxy or polyimide. CAF failures were strongly dependent on hole spacing, and ranged from nearly 0% at 0.035˝ (0.089 cm) hole spacing to 100% at 0.010˝ (0.025 cm) hole spacing, regardless of laminate type. (SMTAI, October 2009)

Nanotechnology

“All Optical Metamaterial Circuit Board at the Nanoscale”

Authors: Andrea Alù and Nader Engheta; andreaal@ee.upenn.edu.

Abstract: Optical nanocircuits may pave the way to transformative advancements in nanoscale communications. This work introduces the concept of an optical nanocircuit board, constituted of a layered metamaterial structure with low effective permittivity, over which specific traces that channel the optical displacement current may be carved out, allowing the optical “local connection” among “nonlocal” distant nanocircuit elements. This may provide “printed” nanocircuits, realizing an all-optical nanocircuit board over which specific grooves may be nanoimprinted within the realms of current nanotechnology. (Physical Review Letters, September 2009)

Boundary scan’s low-cost and IC level access has pushed its use beyond traditional board test applications.

Boundary scan is a method for testing interconnects on PCBs or sub-blocks inside an integrated circuit. It has rapidly become the technology of choice for building reliable high technology electronic products with a high degree of testability.

Boundary scan, as defined by IEEE Std. 1149.1 developed by the Joint Test Action Group (JTAG), is an integrated method, implemented at the IC level, for testing interconnects on PCBs. The inability to test highly complex and dense printed circuit boards using traditional in-circuit testers and bed-of-nails fixtures was evident in the mid 1980s. Due to physical space constraints and loss of physical access to fine pitch components and BGA devices, fixturing cost increased dramatically, while fixture reliability decreased at the same time.

The boundary scan architecture provides a means to test interconnects and clusters of logic, memories, etc., without using physical test probes. It adds one or more so-called “test cells” connected to each pin of the device that can selectively override the functionality of that pin. These cells can be programmed via the JTAG scan chain to drive a signal onto a pin and across an individual trace on the board. The cell at the destination of the board trace can then be programmed to read the value at the pin, verifying the board trace properly connects the two pins. If the trace is shorted to another signal or if the trace has been cut, the correct signal value will not show up at the destination pin, and the board will be known to have a fault.

When performing boundary scan inside ICs, cells are added between logical design blocks to be able to control them in the same manner as if they were physically independent circuits. For normal operation, the added boundary scan latch cells are set so they have no effect on the circuit, and are therefore effectively invisible. However, when the circuit is set into a test mode, the latches enable a data stream to be passed from one latch to the next. Once the complete data word has been passed into the circuit under test, it can be latched into place. Since the cells can be used to force data into the board, they can set up test conditions. The relevant states can then be fed back into the test system by clocking the data word back so that it can be analyzed.
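The shift, latch and read-back sequence described above can be sketched as a simple software model. This is a simplified illustration only, not an implementation of the IEEE 1149.1 TAP controller: the `ScanChain` class and its method names are hypothetical, and the model assumes one cell per pin with a separate shift stage and latch stage.

```python
class ScanChain:
    """Toy model of a boundary scan chain (hypothetical, simplified)."""

    def __init__(self, length):
        self.shift_reg = [0] * length   # cells the serial data stream passes through
        self.latched = [0] * length     # values held at the pins after latching

    def shift(self, bit_in):
        """Clock one bit in at the head; return the bit pushed out of the tail."""
        bit_out = self.shift_reg[-1]
        self.shift_reg = [bit_in] + self.shift_reg[:-1]
        return bit_out

    def shift_word(self, bits):
        """Shift a whole data word, collecting the bits that come out."""
        return [self.shift(b) for b in bits]

    def update(self):
        """Latch the shifted word into place so it drives the circuit."""
        self.latched = list(self.shift_reg)

    def capture(self, pin_values):
        """Load the values seen at the pins into the shift stage."""
        self.shift_reg = list(pin_values)


chain = ScanChain(4)
chain.shift_word([1, 0, 1, 1])      # pass the data word from cell to cell
chain.update()                      # latch it into place
print(chain.latched)                # the first bit shifted in ends up at the tail

chain.capture([1, 0, 0, 0])         # sample the states to be fed back
print(chain.shift_word([0, 0, 0, 0]))  # clock the data word back out, tail first
```

Keeping the shift stage separate from the latch stage is what lets a new word travel through the chain without disturbing the values currently applied to the circuit.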

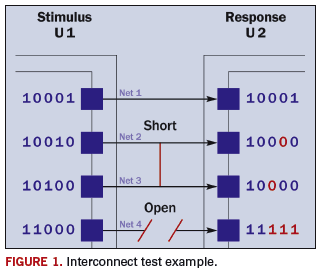

The principles of interconnect test using boundary scan are illustrated in Figure 1, which depicts two boundary scan-compliant devices, U1 and U2, connected by four nets. U1 includes four outputs that drive the four inputs of U2 with various values. In this case, we will assume the circuit includes two faults: a short between Nets 2 and 3, and an open on Net 4. We will also assume a short between two nets behaves as a wired-AND, and an open is sensed as logic 1. To detect and isolate these defects, the tester shifts the patterns shown in Figure 1 into the U1 boundary scan register and applies them to the inputs of U2. The input values captured in the U2 boundary scan register are then shifted out and compared to the expected results. In this case, the results (marked in red) on Nets 2, 3 and 4 do not match the expected values and, therefore, the tester detects the faults on Nets 2, 3 and 4.
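The same four-net scenario can be reproduced in a few lines of code. The sketch below is an illustration under stated assumptions: the exact patterns in Figure 1 are not reproduced here, so it uses a walking-one sequence (one net driven high per pattern) as a stand-in, and the function names are hypothetical. The fault model follows the text: a short behaves as a wired-AND and an open is sensed as logic 1.

```python
def simulate_capture(driven, shorts=(), opens=()):
    """Return the values U2 would capture for one vector driven by U1."""
    captured = dict(driven)
    for group in shorts:
        level = min(driven[n] for n in group)   # wired-AND: any 0 pulls all low
        for n in group:
            captured[n] = level
    for n in opens:
        captured[n] = 1                         # open input is sensed as logic 1
    return captured


def walking_one_patterns(nets):
    """Assumed pattern set: drive a single 1 across each net in turn."""
    return [{n: int(n == hot) for n in nets} for hot in nets]


def find_faulty_nets(nets, shorts=(), opens=()):
    """Compare captured values with driven values and collect mismatching nets."""
    faulty = set()
    for pattern in walking_one_patterns(nets):
        captured = simulate_capture(pattern, shorts, opens)
        faulty |= {n for n in nets if captured[n] != pattern[n]}
    return sorted(faulty)


NETS = [1, 2, 3, 4]
print(find_faulty_nets(NETS, shorts=[(2, 3)], opens=[4]))  # [2, 3, 4]
```

The pattern choice matters. With a wired-AND short, a net is flagged only if some pattern drives it high while its shorted neighbor is driven low, and an open sensed as logic 1 is caught only by a pattern that drives that net low. The walking-one sequence satisfies both conditions for every net.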

By adopting this technique, it is possible for a test system to gain test access to a board. As most of today’s boards are very densely populated with components and traces, it is very difficult for test systems to access the relevant areas of the board to enable them to test the board. Boundary scan makes this possible.

Debugging. While it is obvious that boundary scan-based testing can be used in the production phase of a product, new developments and applications of IEEE-1149.1 have enabled the use of boundary scan in many other product lifecycle phases. Specifically, boundary scan technology is now applied to product design, prototype debugging and field service.

A large proportion of high-end embedded systems has a JTAG port. ARM [Advanced RISC (reduced instruction set computer) Machine] processors come with JTAG support, as do most FPGAs (field-programmable gate arrays). Modern 8- and 16-bit microcontroller chips, such as Atmel AVR and TI MSP430 chips, rely on JTAG to support in-circuit debugging and firmware reprogramming (except on the very smallest chips, which don’t have enough pins to spare and thus rely on proprietary single-wire programming interfaces).

The PCI (Peripheral Component Interconnect) bus connector standard contains optional JTAG signals on pins 1 to 5; PCI-Express contains JTAG signals on pins 5 to 9. A special JTAG card can be used to re-flash corrupted BIOS (Basic Input/Output System). In addition, almost all complex programmable logic device (CPLD) and FPGA manufacturers, such as Altera, Lattice and Xilinx, have incorporated boundary scan logic into their components, including additional circuitry that uses the boundary scan four-wire JTAG interface to program their devices in-system.

ACI Technologies Inc. (www.aciusa.org) is a scientific research corporation dedicated to the advancement of electronics manufacturing processes and materials for the Department of Defense and industry. This column appears monthly.

Solar Power International brought familiar names, faces and memories.

With the solar energy market expected to expand as rapidly in the upcoming decade as SMT did in the 1980s and ’90s, many parallels are being drawn between the two, and some familiar names and faces from electronics manufacturing are now appearing in the solar sector.

Like at Solar Power International 2009, for instance. America’s largest solar energy conference and expo, SPI, was held at the Anaheim Convention Center in late October. With over 24,000 attendees and 900 exhibitors, the atmosphere was reminiscent of the Nepcon shows during the boom years of SMT.

Recognized leaders in the SMT supply chain exhibiting at SPI included BTU, Flexlink, Datapaq, Christopher Associates, Henkel, Cookson and Hisco. These suppliers have identified applications for their core technologies in the photovoltaic (PV) market. Some have made minimal changes to current products, while others have developed new offerings tailored specifically to the demands of this emerging technology.

BTU (btu.com) has long been recognized as a leader in reflow processing for its high thermal transfer efficiency ovens. But before its first reflow oven was even designed, BTU was already serving the solar market. Atmospheric pressure chemical vapor deposition (APCVD) technology was developed in the 1980s to deposit anti-reflective coatings on glass panels. The process is still used today, and BTU’s lineup has expanded to include newer, more varied equipment that supports both silicon and thin-film PV technologies. With 50 to 60% of BTU’s business now in the solar sector, vice president of sales and marketing Jim Griffin suggests that in order to maintain a successful business position, “product diversity is an absolute necessity, and an organization must keep their presence global.” He credits his organization’s growth in solar to its ability to leverage its worldwide support infrastructure, which includes manufacturing facilities and applications labs in the US and Shanghai.

Flexlink (flexlink.com), known to electronics manufacturers for its configurable conveyor systems, also provides material handling systems to solar assemblers. Photovoltaic panels are both heavy and delicate, so Flexlink combined expertise in moving delicate circuit boards and heavy, palletized industrial assemblies to design low-vibration, scalable solar panel handling systems. According to Michael Hilsey, Flexlink’s director of marketing and indirect sales, moving into this new market was a “natural progression,” which is why it claims the largest share of material handling systems in the solar manufacturing industry.

Datapaq (datapaq.com), a provider of thermal data loggers for reflow characterization, now offers similar products designed for solar manufacturing processes. Some of the modifications to the reflow logging gear include protective shells that shield the loggers at temperatures up to 800°C and withstand vacuum pressures equivalent to 300 lbs. of force. The six-channel systems run sample rates of up to 20 datapoints per second to monitor ramp rates as steep as 100°C per second. Now that’s hot.
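To put those two figures side by side, a quick back-of-the-envelope calculation shows how far the temperature can move between consecutive readings at the steepest quoted ramp. The function name is hypothetical; the inputs are simply the numbers quoted above.

```python
def degrees_between_samples(ramp_rate_c_per_s, samples_per_s):
    """Temperature change (°C) between two consecutive samples."""
    return ramp_rate_c_per_s / samples_per_s


# 100°C per second ramp, logged at 20 datapoints per second
print(degrees_between_samples(100, 20))  # 5.0
```

In other words, at the maximum sample rate, each reading on the steepest ramp is about 5°C apart from the last.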

Christopher Associates (christopherweb.net), best known to assemblers for bringing the revolutionary Koh Young solder paste inspection systems to America, now offers a full lineup of solar manufacturing equipment and materials. Matt Holtzman, Christopher’s president, says he has applied the lessons learned through his 30 years of international circuit fabrication and assembly experience to the solar manufacturing world. Having witnessed the multiple booms, busts and consolidations in the circuits industry, he predicts similar movement in the solar manufacturing domain, and is prepared for them. His strategy: “to provide the inevitable differentiator in a developing market – service.” Christopher provides a full lineup of both equipment and materials, most of which are imported from Asian countries, which now lead the global value proposition in solar technology.

On the materials side of the market, Henkel (henkel.com/electronics) is identifying numerous crossover applications for its products typically used in electronics manufacturing. Similar to its functions in circuits, conductive adhesives replace solder in applications that will not accept solder (like glass, for example); silver flake filled epoxies work as grounding die attach materials; low-residue rosin-free fluxes are used in automated soldering equipment; thermal management materials are used to sink heat, and conformal coatings protect assemblies against harsh environments.

Cookson (alphametals.com/products/photovoltaic) is capitalizing on its expertise in soldering materials to gear new products to the process demands of solar cell assembly. Designed specifically for tabbing and stringing operations, ultra-low solids liquid fluxes have been designed to minimize maintenance and activate very quickly, because soldering cycle times are incredibly fast – often less than two seconds. Addressing the process concern of flux overspray and its associated maintenance costs, Cookson has developed a dry flux that is applied directly to the solder ribbon. Dubbed “Ready Ribbon,” the solder-covered copper ribbon is pre-coated with a dry flux, which eliminates the need for spraying, and therefore, cleaning. Steve Cooper, Cookson director of global business development, said, “Since its introduction earlier this year, we’ve seen a significant interest, particularly from module manufacturers.”

Hisco (hiscoinc.com/solar), known for its extensive distribution network in North America, is positioned to support all facets of PV assembly from module manufacturing through installation. Its end-to-end approach is based on distribution experience in the electronics market, and it couples extensive product offerings with service. The company boasts over 100 certified professionals on staff. Tim Gearhart, manager of Hisco’s Silicon Valley branch, says, “Having experts who can help solar manufacturers optimize their processes is one of our greatest strengths.” Hisco’s website illustrates the broad range of photovoltaic technologies and the numerous areas supported by the organization.

It’s no surprise to see leaders in SMT assembly taking leadership positions in PV assembly, and it will be no surprise to see many followers tagging along as the industry prepares for explosive growth. What will separate the leaders from followers in the brave new world of solar manufacturing? The same characteristic that has differentiated them for years in the SMT world: customer focus.

Chrys Shea is founder of Shea Engineering Services (sheaengineering.com); chrys@sheaengineering.com.

The test for cleanliness is challenged by numerous subjective assessments.

Solubility is a funny thing, yet it is easily explained. A liquid’s ability to dissolve a substance depends on physical properties such as polarity, intermolecular interactions, temperature and other key features.

Recently, I took part in a presentation that made the case that an extraction with IPA-water at 176°C would provide superior ion chromatography results when injected (compared to room temperature). Thankfully, I have spent years in an organic chemistry lab. Despite the fact that our resources were limited at the time, we were able to find spare parts and assemble a number of chromatography instruments to further our research on asymmetric catalysis. I noticed the chromatography columns were sensitive to temperature and solvents. In other words, the extracted products were never injected onto the column at temperatures higher than room temperature. We had to make sure the solubility of our organic molecules was 100% to obtain accurate data on reaction result and yield (i.e., enantiomeric excess). Why is this important, you might wonder?

Numerous companies are investigating viable alternatives to IPA and water (currently in use for ROSE test, IPC-TM-650 methods 2.3.25.1 and 2.3.28, and MIL-P-28809 section 4.8.3). One might state that heating IPA and water temporarily increases the solubility of the contamination. However, the effect is reversible: once the temperature drops, so does the solubility. During a chromatography experiment, the respective results from heated extraction, therefore, will not be any better than ionic contamination measurements acquired at room temperature.

While people like stability and are generally resistant to change, we have to continue to acknowledge that our analytical assessments today are far from satisfactory or correct. Not only did we author numerous articles to raise public awareness, but we also initiated various internal projects to further the possibilities of developing a new extraction method that would not only dissolve today’s flux residues, but also produce numeric data far superior to frequently used ion chromatography and ionic contamination. (Please understand that we do support the latter methods and use them daily in our labs. My main point simply is why not look for something better?)

Related to this area of improvement, the test for cleanliness via ion chromatography is also challenged by other subjective assessments. During each and every analysis, the extracted liquid is injected onto the various columns to detect anions and cations. This results in quantitative numbers, which still require a correlation to “cleanliness.” Here subjectivity comes into play. The values are, in most cases, correlated to the “personal experience” of each of the analytical labs performing this test. My question is: Who is to judge whether a certain level of sodium (Na+), potassium (K+) or weak organic acid (RCOO-) means clean or not clean? Further, I do not think there is a true relationship/correlation possible between long-term reliability and a certain ion level. Assumptions can be made, but users have to be aware that each assembly is so different in nature that one can hardly find a numerical value applicable to each user and industry.

For example, consider a highly complex, densely populated board compared to a less populated board with widely spaced components. The risk of electrochemical migration or corrosion might be present in the first case, but the same level of ions may not harm the second board. This problem is compounded by the fact that analytical labs are hard-pressed to relate the environmental exposure/application to the individual assemblies. An assembly might not fail in a dry environment, but would fail once used under humid conditions. One can continue similar examples for some time.

Imagine for a moment the conundrum presented to the industry. While we are searching to find a better solvent than IPA/water, we also have to honestly ask what impartial (non-vendor based) method will be used to employ it, and finally, how best to find a numerical value for the ultimate cleanliness testing method that is representative and can be applied by most industry users. I assume that to overcome this dilemma, research will eventually lead to a combination of test methods that will provide the best overall estimate of the risk associated with your assembly.

Research is currently underway to provide viable, general replacement solutions that can and should be evaluated and compared to the present method.

Harald Wack, Ph.D., is president of Zestron (zestron.com); h.wack@zestronusa.com. His column appears regularly.